Cognition in the noise

An interview with Professor André Fenton, New York University, conducted by April Cashin-Garbutt

How can we uncover the way we learn and know things? Recent SWC seminar speaker, Professor André Fenton, has spent his career focusing on the deviations from expectations, the noise that can’t be explained. In this Q&A, he shares how this approach has led him to a new paradigm for thinking about cognition.

What first sparked your interest in researching how we learn and know?

I was always interested in understanding how what we know is actually knowable. I was born in Guyana in South America and emigrated to Canada when I was seven. I remember being absolutely perplexed that many of the things I knew, like social rules, rules of games and the things I found interesting in Guyana, were not valued, or, sometimes even worse, were thought of as bad things to know. Every time I visited Guyana and then returned to Canada, this would switch back and forth.

This experience when I was young made me think about how we know what to know about. I became interested in understanding the subjectivity of reality. I couldn’t help but recognise that brains have to build their model of knowledge, what is knowable and what is real, and this is why I came to study the brain in the way that I do.

Why has it traditionally been assumed that neurons respond to external stimuli as if to represent them?

This is a very reasonable inference, especially if you are curious to know what this black box of the brain does. One thing you can do is manipulate things that are not in the black box, and if you believe in cause and effect, then you can manipulate what you imagine are the inputs and observe the consequences. This has been the tradition in neuroscience.

Scientists started by manipulating sensory input, or by manipulating neurons and observing the consequences in the periphery, in the muscles. Any reasonable person would start with the inputs and outputs. But if you want to draw a causal chain, the challenge is that such a system has control or feedback built into it, so those inferences fall apart even at the periphery.

From the very moment you get past the sensory neuron, the next neuron responds not because of the energy in the world that is being transduced, but as a consequence of the activity of some presynaptic neuron. As soon as you recognise that, it becomes an open question whether the neuron will lawfully respond to the stimulus you can manipulate. The closer you are to the original transducing neuron, the more likely that is, but a region somewhere in the middle of the brain, like the hippocampus, is very far away from those stimuli.

The thing that John O’Keefe’s work communicated to me is that this idea of watching how sensation causes neurons to fire might be misguided. There are very good arguments and data to show that there has to be a model of the world that is used to interpret those stimuli. We know this even from the way the retina allows us to have perceptions and sensations of things that aren’t actually that way. So if you want to study this, it is best to understand not just what the inputs are, but what the transformation of the inputs turns out to be. It is best to understand the system itself that is actively receiving those inputs, because that system is not passive, and its activity matters.

What made you realise that there could be another plausible model?

I’ve always paid attention to the measurements that we can make of neurons in the brain that don’t fit into the way we think about these systems. My career has always been characterised by paying attention to the deviations from the expectations, the noise you can’t explain.

When you pay enough attention to the deviations, you start to think about how you can make sense of all of that. One way of trying to make sense of it is to reject the notion that the brain is passive, that the neurons are just waiting to respond to things, and to recognise instead that they have their own internal, historical, experiential reasons, on different timescales, for their activity. This is the paradigm in which I currently think about things.

How are you investigating which paradigm is most plausible?

In my laboratory we have very bright, creative people and what they do is come up with experiments all the time. My job is to critique those experiments and I usually do so in a way that is intended to get us to choose the experiments that have the best chance of changing how we think about something. If those experiments are designed to support something we believe, then I critique them so fiercely that they’re rejected eventually!

Experiments that challenge the way the field is thinking are the best ones to pick. I like to say, “my opinions and your opinions don’t matter, let’s find what, as an enterprise, neuroscientists are thinking and design experiments that check whether we’re thinking about things correctly or not.”

What levels of biological organisation does your research cover?

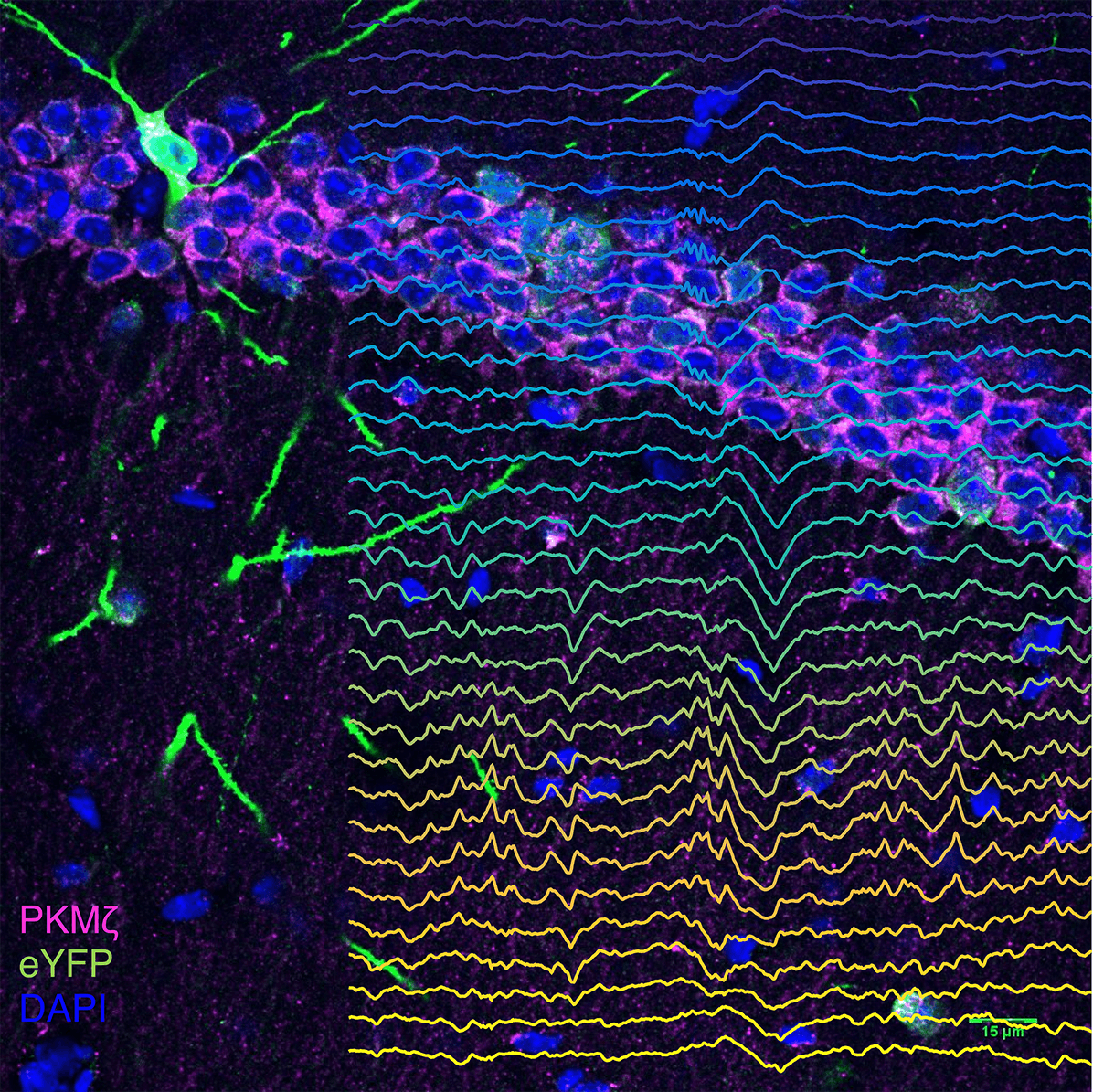

My research covers all levels of biology to the extent that we’re capable. We deliberately try to study a problem at every level of biology that we can. Obviously, that is hard. It used to be experimentally hard to collect, for example, a transcriptome, a proteome and the synaptic strengths amongst cell classes in particular pathways, together with learning or memory recollection measured at the behavioural level.

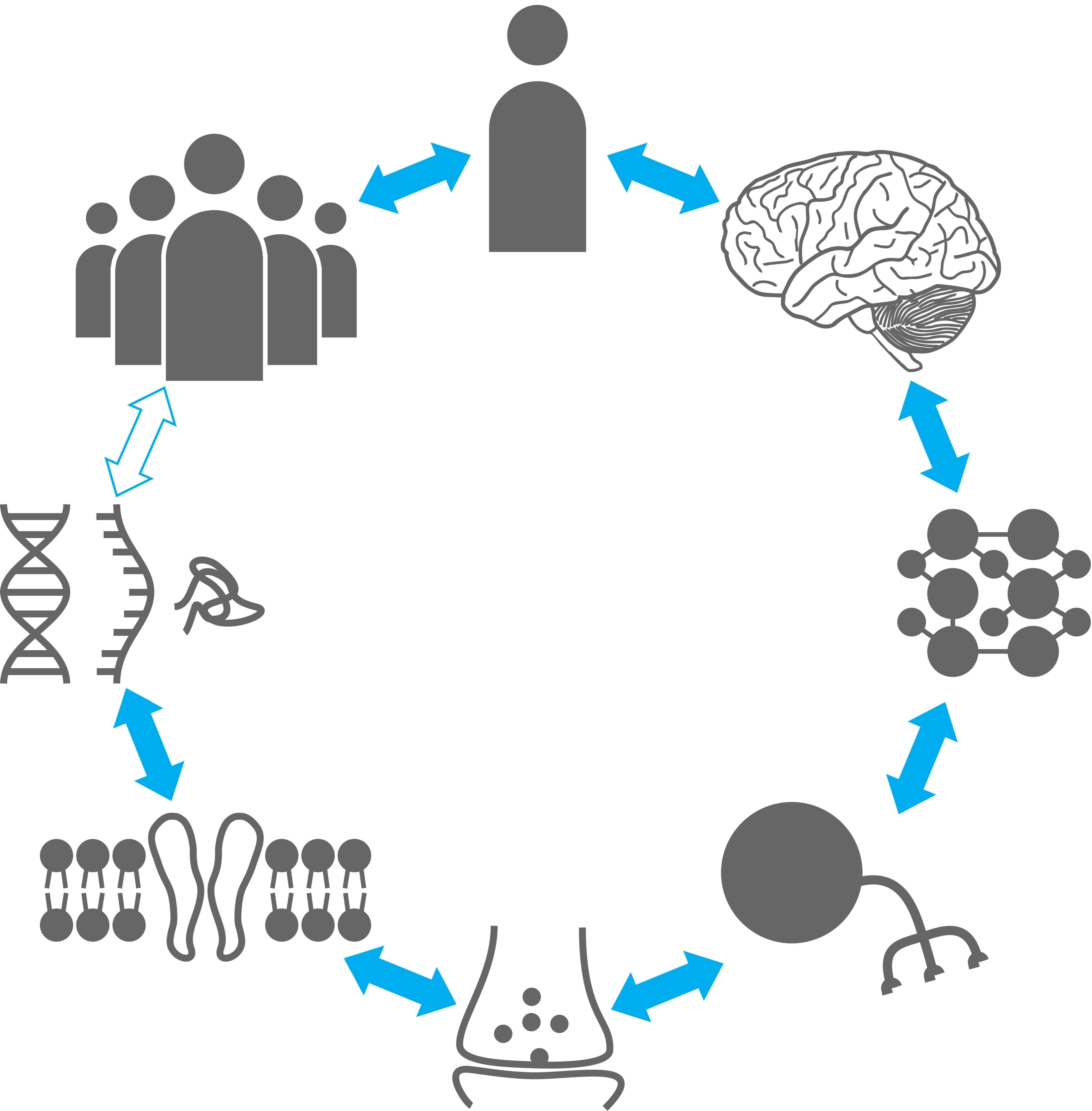

Circle of being

I often talk about the circle of being, which has different levels with a two-way arrow between every adjacent level. It looks like a circle because the social level connects back to the genomic level, even though that connection is only correlational, and we try to make those inferences too.

We try to do experiments that I call “single subject experiments”, which are designed to collect information across levels, for example to understand how memories persist. What used to be hard was collecting the data. That’s still hard, but we can do it; now the hard part is integrating those data.

Imagine a transcriptome has 20,000 measurements, and you can measure a transcriptome in different sub-regions of the hippocampus alone, so you have on the order of 100,000 gene expression measurements. You can also measure a proteome, which has an order of magnitude fewer, around 2,000 proteins. Then you can measure 20 or so synaptic function variables at different input pathways in the hippocampus, so you’re now in the tens, and you can measure 10 behavioural variables. So you have a statistical problem: how do you relate the order-100,000 transcriptome measurements and the order-10,000 proteome measurements to the tens of measurements at the level of synapses and behaviour?

Here we use machine learning tricks to interrelate those data sets, and then we have to zoom out and ask how it all makes sense. You can’t think of this as a system designed to solve one specific problem: each level, the genome, the proteome and so on, has its own utility functions or incentives. So we have started to use game theory to help work out some of the rules for moving across the different levels of biology.

By the end of my career, I hope to make coherent sense of all of this. But overall we are trying to collect these single subject data sets and use game theory to guide how we think about the interactions, which we try to discover using machine learning and other bioinformatics approaches.

What key implications do you hope your research will have?

To me, the most interesting implication is abstract: it would shift the way neuroscientists think about neuronal representations, so that they are seen as an active part of the process of sensing, perceiving and computing an outcome rather than as passive responders to stimuli.

More concretely, we do quite a lot of research where we make manipulations to learn how psychedelic drugs, or psychotogenic drugs like angel dust or ketamine, can change the organisation of neuronal activity, or how genomic manipulations can mimic brain diseases. What I’m hoping our research will show is that the consequences of these manipulations can be understood in this representational framework, where disturbances of cognition are understood not as mere responses to the proximal manipulation, but as a derangement, a fragility, an excessive robustness or a weakening of the representational motifs through which the individual interprets the world.

This is a very different way of thinking about disease and drug interactions, which are mostly thought about in terms of where the drug binds to receptors and what the target engagement is. Instead, this perspective recognises that there is a consequence at the level of systems-level neural coordination, and that this helps determine whether some of these manipulations are effective or not. That’s a perspective I work hard at communicating.

What’s the next piece of the puzzle your research will focus on?

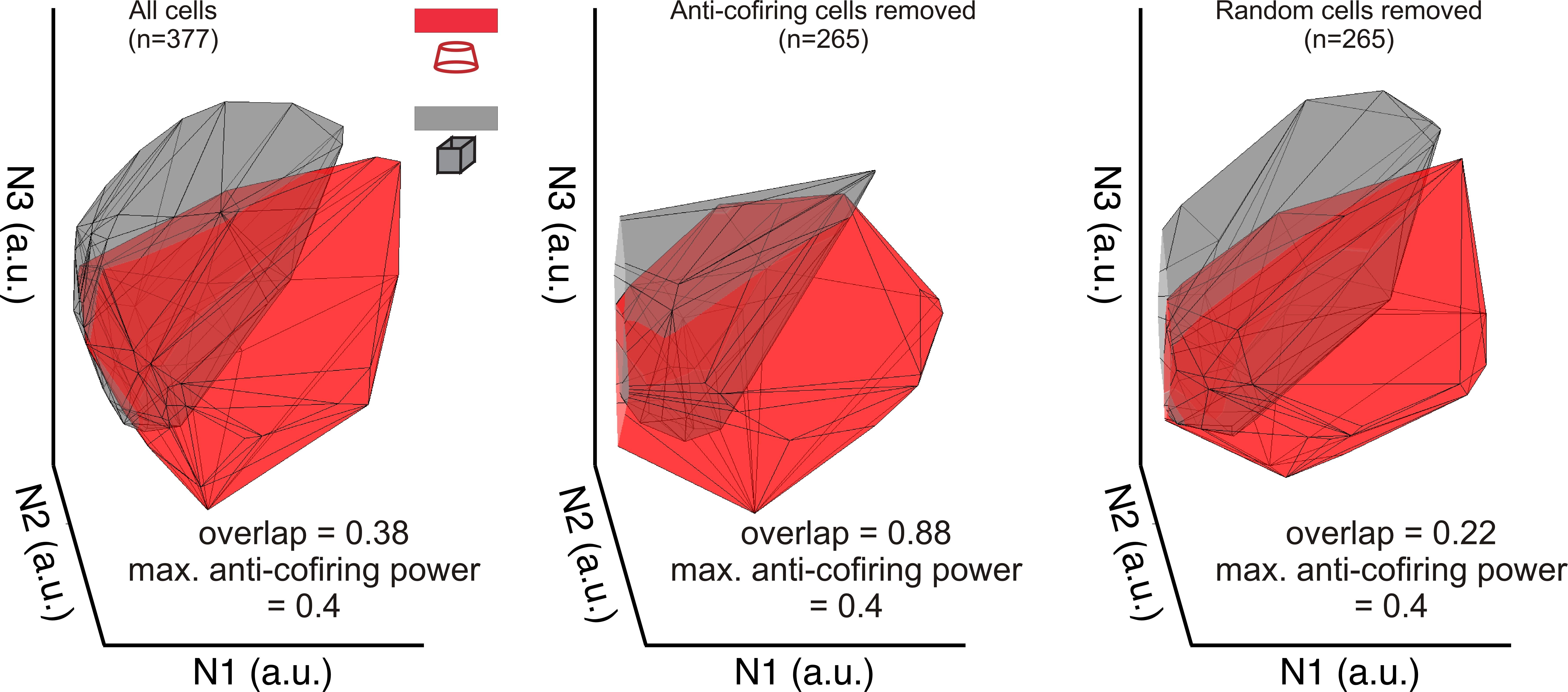

My research is going to focus on two basic questions. First, I want to understand the properties of anti-cofiring cells better, and to manipulate other cells so that they become anti-cofiring, because that makes predictions about what the neural system will do.

Second, to me the fascinating thing about the hippocampal-entorhinal circuit, and the reason grid cells and place cells have captured people’s collective imagination, is that these are anatomically separated but linked parts of the brain that allow you to interpret the cognitive variables they are processing. If you accept that each area, as its own network, is tuned somewhat independently to variables in the external world because it is governed by its own internal tuning or internal dynamics, then the very interesting problem becomes: how do they talk to each other in a way that generates coherent knowledge the individual can use?

That’s the question we are pursuing: to understand how any pair of neural systems can talk to each other, given that they are not just passively responding to concrete stimuli in the world but have an active, neuronally idiosyncratic way of processing information. This makes it a challenge to think about how you go from one area to the next, or from one group of cells to the next, and that is the problem we are working on.

About Professor André Fenton

André Fenton, professor of neural science at New York University, investigates the molecular, neural, behavioral, and computational aspects of memory. He studies how brains store experiences as memories, how they learn to learn, and how knowing activates relevant information without activating what is irrelevant. His investigations integrate across levels of biological organization, using genetic, molecular, electrophysiological, imaging, behavioral, engineering, and theoretical methods. This computational psychiatry research is helping to elucidate mental dysfunction in diverse conditions like schizophrenia, autism, and depression. André founded Bio-Signal Group Corp., which commercialized an FDA-approved portable, wireless, and easy-to-use platform for recording EEGs in novel medical applications. André implemented a CPAP-Oxygen helmet treatment for COVID-19 in Nigeria and other LMICs, and founded Med2.0 to use information technology for the patient-centric coordination of behavioral health services, which is desperately needed to deliver mental health care equitably. André hosted PBS’ NOVA Wonders, and hosts “The Data Set”, a new web series soon to launch, on how data and analytics are being used to solve some of humanity’s biggest problems.