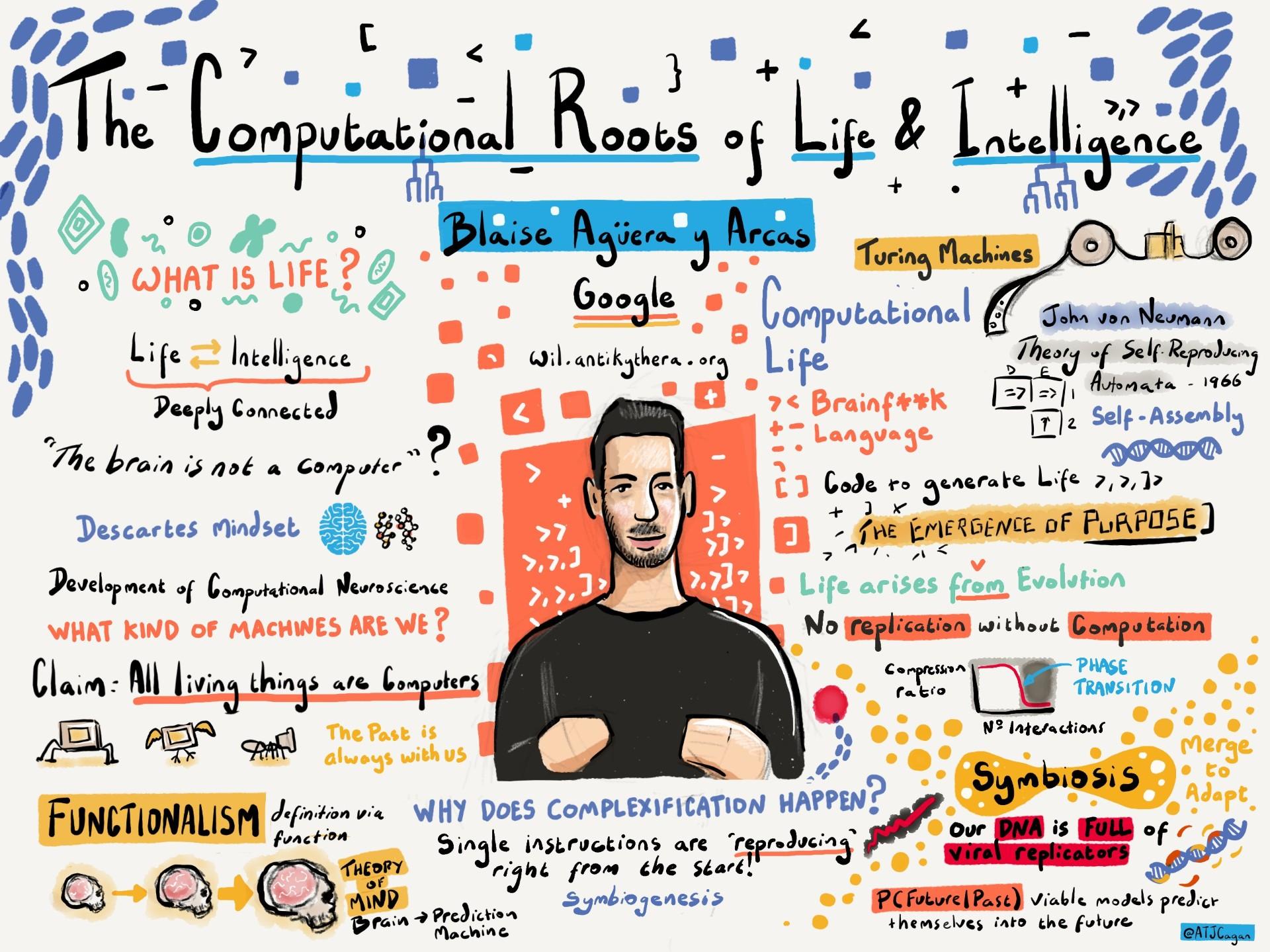

Could computation connect the origins of life and intelligence?

An interview with Blaise Agüera y Arcas, VP and Fellow at Google Research and Google’s CTO of Technology & Society, conducted by April Cashin-Garbutt

Many argue that the brain is not a computer, but Blaise Agüera y Arcas challenged this notion in his opening address at the SWC Lecture 2024. He explained how both life and intelligence are inherently computational and may even be selected for in the same way.

In this Q&A, Blaise discusses Turing and von Neumann’s foundational work in computer science. He also shares cutting-edge research in Artificial Life and the impact of these new perspectives on the origins of intelligence. Finally, he considers the potential of AI for transforming work and education, and the role neuroscience may play in shaping this future.

In the mid-20th century, von Neumann discovered that a system capable of reproduction and open-ended evolution is inherently computational. What does this mean for living systems in terms of intelligence?

The two fathers of computer science were Turing and von Neumann. In the 1930s, Turing came up with the Turing machine, which was an early mathematical model for what a computer does in some abstract sense.

Turing did this as a byproduct of proving that there are some questions you can’t answer. This was in response to the decision problem, known as the Entscheidungsproblem, which David Hilbert had posed as one of the great challenges in the foundations of maths.

Making proofs about what could be computed required defining an abstract machine to do computing, and that’s what the Turing machine was. I don’t think Turing originally thought of it as something that would actually get built, but it looked very much like the first digital computers that were built a few years later. These first computers were based on heads that moved back and forth on a tape, reading and writing symbols according to some rules.
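The tape-and-head picture can be made concrete in a few lines of Python. This is a minimal sketch rather than Turing’s own formalism: the rule-table layout, the `run_turing_machine` function and the binary-increment `INCREMENT` machine are our own hypothetical choices, and a Python dictionary stands in for the unbounded tape.

```python
from collections import defaultdict

# Rule table: (state, symbol read) -> (symbol to write, head move, next state).
Rules = dict[tuple[str, str], tuple[str, int, str]]

# Hypothetical example machine: add 1 to a binary number, starting with the
# head on the rightmost digit. "_" is the blank symbol.
INCREMENT: Rules = {
    ("carry", "1"): ("0", -1, "carry"),  # 1 + 1 = 0, carry one place left
    ("carry", "0"): ("1", 0, "halt"),    # 0 + 1 = 1, done
    ("carry", "_"): ("1", 0, "halt"),    # ran off the left end: new leading 1
}


def run_turing_machine(rules: Rules, tape_str: str, head: int,
                       state: str = "carry", max_steps: int = 10_000) -> str:
    """Step the machine until it reaches 'halt'; return the non-blank tape."""
    tape = defaultdict(lambda: "_", enumerate(tape_str))  # unbounded tape
    steps = 0
    while state != "halt":
        if steps == max_steps:
            raise RuntimeError("no halt within max_steps")
        write, move, state = rules[(state, tape[head])]  # read the symbol
        tape[head] = write                                # write a symbol
        head += move                                      # move the head
        steps += 1
    cells = sorted(i for i, s in tape.items() if s != "_")
    if not cells:
        return ""
    return "".join(tape[i] for i in range(cells[0], cells[-1] + 1))


print(run_turing_machine(INCREMENT, "1011", head=3))  # prints 1100
```

Each step does only what the text describes: read the symbol under the head, write a symbol, move, and change state according to a fixed rule table. Swapping in a different table gives a different machine without touching the simulator.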

Around the same time, von Neumann began to get interested in computing. This was towards the end of the 1930s. Von Neumann spent a lot of time at Los Alamos during the war effort, where he worked with a Polish mathematician, Stan Ulam. Together they came up with the idea of cellular automata: two-dimensional grids of cells, like pixels, each of which computes a simple function and communicates with its neighbours.

The original idea behind cellular automata was to create a physical model of computing that would exist in the world, as opposed to just being an abstract machine. The board defines the universe, and elements of that universe interact and can compute.
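A cellular automaton is easy to sketch in code. Von Neumann’s own self-reproducing automaton used 29 cell states and is far too large to show here, so this example swaps in a much simpler, later automaton: John Conway’s Game of Life (1970), which has two states per cell and is also known to be capable of universal computation. The grid size, the wrap-around edges and the function names are assumptions made for illustration.

```python
# Conway's Game of Life on a small grid that wraps around at the edges.
# Every cell is 0 (dead) or 1 (alive) and obeys the same local rule.
Grid = list[list[int]]


def live_neighbours(grid: Grid, r: int, c: int) -> int:
    """Count live cells among the 8 neighbours of (r, c), wrapping at edges."""
    rows, cols = len(grid), len(grid[0])
    return sum(grid[(r + dr) % rows][(c + dc) % cols]
               for dr in (-1, 0, 1) for dc in (-1, 0, 1)
               if (dr, dc) != (0, 0))


def step(grid: Grid) -> Grid:
    """Update every cell at once from the current state of its neighbours."""
    new = []
    for r, row in enumerate(grid):
        new_row = []
        for c, alive in enumerate(row):
            n = live_neighbours(grid, r, c)
            # Birth with exactly 3 live neighbours; survival with 2 or 3.
            new_row.append(1 if n == 3 or (alive and n == 2) else 0)
        new.append(new_row)
    return new


# A "blinker": three live cells in a row flip between horizontal and vertical.
blinker = [[0] * 5 for _ in range(5)]
blinker[2][1:4] = [1, 1, 1]
for row in step(blinker):
    print("".join("#" if cell else "." for cell in row))
```

Running it prints the blinker in its vertical phase. Nothing in the rule mentions blinkers; the pattern emerges from every cell applying the same neighbour-counting rule, which is the sense in which the elements of such a universe interact and compute.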

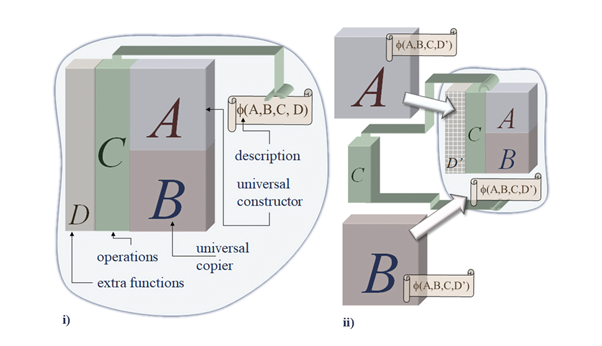

One of the questions that von Neumann posed was, “What would it take to have a machine that could make another copy of itself?” He thought about this not just in terms of computers, but in terms of how anything could make a copy of itself while also being able to carry heritable changes in its own structure.

On the face of it, this seems like a really gnarly problem. How can a machine make another machine as complex as itself? But of course, this is exactly what all life forms must do.

What von Neumann realised is that it would only be possible to do this if the life form had a tape that acted just like the tape in Turing’s computing machine. Machine A would follow the instructions on the tape and build according to the instructions. Machine B would make a copy of the tape.

If the instructions for making machine A and machine B are themselves also encoded on the tape, then you have a self-replicator. And what von Neumann showed is that machine A is a Turing machine. Essentially, it is a mathematical proof that in order to replicate, you need to be a computer.
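The bookkeeping of von Neumann’s scheme fits in a toy model, under heavy simplifying assumptions: “building” a part here just means recording its name, and `Machine`, `construct`, `copy_tape` and `replicate` are hypothetical names, not von Neumann’s notation. What it does show is the key trick: one tape, read once as instructions by machine A and copied once as uninterpreted data by machine B, with changes to that tape inherited by every descendant.

```python
from dataclasses import dataclass

Tape = tuple[str, ...]  # a tape is a sequence of instructions


@dataclass(frozen=True)
class Machine:
    parts: tuple[str, ...]  # the "body" that machine A built from the tape
    tape: Tape              # the tape this machine carries


def construct(tape: Tape) -> tuple[str, ...]:
    """Machine A: read the tape as instructions and 'build' each named part."""
    return tuple(instr.removeprefix("build ") for instr in tape)


def copy_tape(tape: Tape) -> Tape:
    """Machine B: pass the tape on as data, without interpreting it."""
    return tuple(tape)  # tuples are immutable, so this stands in for a copy


def replicate(parent: Machine) -> Machine:
    # The same tape is used twice: interpreted by A, copied by B.
    return Machine(parts=construct(parent.tape), tape=copy_tape(parent.tape))


# The tape describes machines A and B themselves, which closes the loop.
TAPE: Tape = ("build constructor A", "build copier B")

ancestor = Machine(parts=construct(TAPE), tape=TAPE)
assert replicate(ancestor) == ancestor  # an exact copy of itself

# A change to the tape is heritable: descendants carry the new part too.
mutant_tape = TAPE + ("build tail",)
mutant = Machine(parts=construct(mutant_tape), tape=mutant_tape)
assert "tail" in replicate(replicate(mutant)).parts
```

Copying the description, rather than the built machine, means the machine never has to inspect and describe itself in order to reproduce.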

What is so interesting about this insight is that he had it a few years before the structure and function of DNA were discovered by Watson and Crick! He anticipated DNA. In nature, machine A is the ribosome, which follows instructions to assemble the proteins we are made of, and machine B is DNA polymerase, which copies the DNA tape. So, life works exactly as von Neumann predicted!

What have recent experiments in Artificial Life shown?

Artificial life is an old field, which began around the same time as the earliest computers and saw pioneers like Nils Aall Barricelli working with von Neumann on some of the very first artificial life experiments.

The field hasn’t had much attention; it is a bit like where neural net research was until recently. We have now had Nobel Prizes awarded for neural nets, and I suspect Artificial Life will have its own renaissance in the near future.

In this last year, we have produced a very interesting Artificial Life result. We started with nothing but a system that can compute (which, in that sense, is no different to our universe, whose laws of physics allow for computation) plus noise.

What we saw happen is that noise gives rise to replicators of the von Neumann kind. These programs learn to copy themselves without being seeded with any kind of pre-programmed replicator.

Essentially, programs write themselves given nothing more than a universe that can compute, plus noise. It is a demonstration of how evolution is at work even before there is biology. Evolution selects for things that can reproduce and can compute in the von Neumann sense.
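The interview doesn’t describe the experiment’s machinery, so the following is only a toy in its spirit, and every specific is our own assumption rather than the setup the team used: a “soup” of random byte strings standing in for noise, a made-up instruction set that borrows Brainfuck’s symbols (with a second head and our own meanings for `.` and `,`), and a small per-step chance of overwriting a random byte. At each step two programs are concatenated, run as self-modifying code on their shared tape, and split back apart. Names like `soup_step`, `PROGRAM_LEN` and the mutation rate are hypothetical, and a run this small is not expected to produce replicators; it only shows the shape of the loop.

```python
import random
from typing import Optional

PROGRAM_LEN = 16  # hypothetical size of each program, in bytes


def find_match(tape: bytearray, ip: int, step: int) -> Optional[int]:
    """Find the bracket matching tape[ip], scanning forward (+1) or back (-1)."""
    same, other = (ord("["), ord("]")) if step == 1 else (ord("]"), ord("["))
    depth, i = 0, ip
    while 0 <= i < len(tape):
        if tape[i] == same:
            depth += 1
        elif tape[i] == other:
            depth -= 1
            if depth == 0:
                return i
        i += step
    return None


def run(tape: bytearray, max_steps: int = 512) -> None:
    """Execute the tape in place; code and data are the same bytes.

    Two data heads (h0, h1) can read and write anywhere on the tape, so a
    program can overwrite its partner, or itself. Other bytes are no-ops.
    """
    ip = h0 = h1 = 0
    n = len(tape)
    for _ in range(max_steps):
        if not 0 <= ip < n:
            break
        op = chr(tape[ip])
        if op == "<":
            h0 = (h0 - 1) % n
        elif op == ">":
            h0 = (h0 + 1) % n
        elif op == "{":
            h1 = (h1 - 1) % n
        elif op == "}":
            h1 = (h1 + 1) % n
        elif op == "+":
            tape[h0] = (tape[h0] + 1) % 256
        elif op == "-":
            tape[h0] = (tape[h0] - 1) % 256
        elif op == ".":
            tape[h1] = tape[h0]  # copy a byte from head 0 to head 1
        elif op == ",":
            tape[h0] = tape[h1]  # copy a byte from head 1 to head 0
        elif (op == "[" and tape[h0] == 0) or (op == "]" and tape[h0] != 0):
            j = find_match(tape, ip, 1 if op == "[" else -1)
            if j is None:
                break  # an unmatched bracket halts the program
            ip = j
        ip += 1


def soup_step(soup: list[bytearray], rng: random.Random,
              mutation_rate: float = 0.01) -> None:
    """Pick two programs, run them concatenated, split them back, add noise."""
    i, j = rng.sample(range(len(soup)), 2)
    tape = soup[i] + soup[j]
    run(tape)
    soup[i], soup[j] = tape[:PROGRAM_LEN], tape[PROGRAM_LEN:]
    if rng.random() < mutation_rate:  # noise: overwrite one random byte
        k = rng.randrange(len(soup))
        soup[k][rng.randrange(PROGRAM_LEN)] = rng.randrange(256)


rng = random.Random(0)
soup = [bytearray(rng.randrange(256) for _ in range(PROGRAM_LEN))
        for _ in range(64)]
for _ in range(1_000):
    soup_step(soup, rng)
```

Because the heads can copy bytes across the whole shared tape, a program that happened to write a copy of itself onto its partner’s half would, in principle, spread through the soup on later interactions; that is the kind of selection the experiment describes.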

Does synthetic life evolve in the same way as biological life?

Yes, I think so. One of my colleagues, Michael Levin at Tufts University, recently solicited definitions of life from 80 researchers and found that they all submitted totally different responses. One biochemist even wrote, “defining life is for poets, not scientists!”

My perspective is controversial, but my own belief is that life has nothing to do with the details of how biology happens to have worked out on our planet; I think it is much more generic.

Life is what happens in a universe where computation is possible, when things evolve to be able to replicate themselves and compute. I think life is a very generic phenomenon and, in that sense, synthetic life is no different to natural life.

What do these new perspectives mean for the origins of intelligence?

The perspective I gave at the SWC Lecture is that life and intelligence are very closely coupled in that they’re both computational. Once you have a machine A and a machine B and can replicate through computation, then you have a general-purpose computing device.

Evolution has something to say about this too. If you are able to copy yourself, but you are also living in a dynamic environment, especially one with other life in it, then you’re going to want to respond to your environment and make predictions. You want to predict your own behaviour, the behaviour of others and how those interact.

Essentially, intelligence comes along with life and is selected for in the same way. I’m not even sure that they’re different: life and intelligence.

Do you think AI will ever be sentient? If we had an agreed definition of sentience, could you see a future where we had ethical frameworks for AI rights?

I think the systems that we’re developing now are not fundamentally different from us. But an AI rights framework doesn’t necessarily follow from that.

A lot of philosophers, such as David Chalmers, believe that rights, or moral patiency as it is usually called, are a consequence of something like consciousness. The big question is: is AI conscious? And if it is conscious, then maybe AI should have rights and moral patiency.

It’s interesting for us to hold that belief, because the whole concept of human rights is relatively recent. For a long time it wasn’t extended to all humans; plenty of people were excluded. A lot of the struggles of the last two hundred years have been about racism, sexism, and the extension of rights to all humans.

The moral philosopher Peter Singer has written a lot about patiency for animals. The abortion debate is about patiency for fetuses. And these are very complicated questions, and I don’t think there is a science of it.

I think that the capacity to feel things is also emergent from evolutionary selection. For instance, Lars Chittka, an insect neuroscientist, published a book recently called The Mind of a Bee, which talks about bee cognition. The book makes it clear (to me, at least) that bees have subjective experiences and can feel pain.

I think almost everything that is alive can, and I’m not even sure single cells are exempt from that. Because if you are only going to survive by acting intelligently in the world, avoiding harm and going after pleasure like eating, then feelings are an obvious mechanism that emerges from that survival imperative. I don’t think there is anything particularly sanctified about that. There’s no special fairy dust that humans have that other animals and intelligent systems don’t have.

We’ve just done some psychological experiments with AI models, asking about theory of mind and also pleasure and pain. They’ve been trained on human data, and as far as we can tell they’ve got all the things that we’ve got. People often say the models only behave as if they have these things: it is not real, it is just behaviour. But then what does it mean to be faking it, and how could we prove that?

How much do you think we will be reliant on personal, AI-built avatars in the future? How soon do you think this will happen?

A couple of years ago, my old team built a prototype called “What did I miss?” You could send it to meetings on your behalf and ask it questions, and it would summarise the meeting. So, in principle, I think this is easy.

The reason we are not seeing this everywhere yet is that today’s AI models are not quite reliable enough to be let fully off the leash. If they are doing things that are consequential, such as interacting with other people, you need the same level of confidence in their performance that you would have in a really trusted person, and they’re not quite there yet.

To me, this seems less like a threshold and more like a hill that we’re climbing fairly rapidly. It is part of the same problem as hallucination. The early AI models hallucinated a lot, and they still do sometimes, but they have gotten a lot better over the past year. I am sure they will continue to do so.

What do you think AI holds for the future of education? Will AI lead to personalised digital tutors and is Google working on anything like this?

I can’t speak for the whole of Google, but we’ve had projects along these lines that I know of, and I am sure there are more that I don’t know of!

It is an obvious and powerful application, particularly in countries that are less economically developed and have less access to human teachers. This is especially true in places with limited access to tutors who can give a lot of individual attention and who have the skill and knowledge across various domains to make that teaching high quality and personalised. So, I think it will be really important in those environments.

The typical follow-on question is: is that the end for teachers? I doubt it! What we face at the moment is a great shortage of that sort of attention, so more AI help in these areas seems like an unmitigated good to me.

But we need to make sure the systems are more reliable than they are today. They can already be helpful in a variety of circumstances, but they can go off the rails, so I don’t think they are quite ready to scale up or to lean on heavily just yet. But they are very close!

Some people ask whether this unreliability is a fundamental problem with transformer models, and whether we need some fundamentally new innovation. I am sure we’ll have fundamental new innovations, but I don’t think unreliability is an inherent limitation of the models. For example, I took my first ride in a self-driving Waymo car a few weeks ago and I was very impressed! They are very safe and have been tested for tens of millions of miles. They have a really good record and are already much safer than human drivers.

Is Google focusing efforts primarily on products and if so, will academia need to take more of a lead on future research projects like AlphaFold?

I have seen a big turn towards applied work and also towards work that is less public. Google Research is where the transformer came from, as did a lot of other fundamental research in AI. The number of papers at NeurIPS (the Conference on Neural Information Processing Systems) published by Google is huge, outnumbering the contributions of top universities in that field.

But I am actually very concerned about the lack of public and government investment in basic research. During the heyday of government spending on basic research, it was a significant percentage of GDP in countries like the UK and the US. In some sense, we are still harvesting that.

Google Research, like Bell Labs and a few others, is an exception in terms of companies doing lots of basic research, but it is so important for the government to invest in this too. I really worry about declining investment in science and basic research.

I worry also about the fact that big tech companies are now in a head-to-head race to work on products. There is an understandable shift in resources, given how high stakes that competition has become.

But over the last year I founded a new group to work on bleeding-edge basic research. It is not about commercialisation or products at all, and it is committed to publication, collaboration with academia and so on, because I am concerned about preserving that.

How do you think neuroscience will shape the future of AI? Are there fundamental modular architectures of the brain that would be helpful?

A lot of the previous generation of AI came straight from neuroscience. For example, the rise of cybernetics and neural nets in the 1950s was inspired by the cortex, and convolutional nets were inspired by models of vision that came from Hubel and Wiesel.

The transformer is the first major evolution in AI that was not directly neurally inspired. Also, there’s been so much progress in AI in the last few years that the shoe feels like it’s on the other foot. Neuroscience first inspired AI, but now AI is taking the lead, and neuroscience is making hypotheses about how the brain works based on what seems to work in transformers.

It is a dialogue. I think for decades the neuroscientists were ahead of the AI researchers and now the AI researchers, in some respects, are a little bit ahead of neuroscientists. But it will keep going back and forth. I am not even sure that transformers are as non-neural as they appear, and they may be closer to what is going on in the brain than we may yet realise.

About Blaise Agüera y Arcas

Blaise Agüera y Arcas is a VP and Fellow at Google Research, and Google’s CTO of Technology & Society. He leads an organization working on basic research in AI, especially the foundations of neural computing, active inference, evolution, and sociality. In his tenure at Google he has led the design of augmentative, privacy-first, and collectively beneficial applications and he is the inventor of Federated Learning, an approach to training neural networks in a distributed setting that avoids sharing user data. Blaise also founded the Artists and Machine Intelligence program, and has been an active participant in cross-disciplinary dialogs about AI and ethics, fairness and bias, policy, and risk. Until 2014 he was a Distinguished Engineer at Microsoft. Outside the tech world, Blaise has worked on computational humanities projects including the digital reconstruction of Sergei Prokudin-Gorskii’s color photography at the Library of Congress, and the use of computer vision techniques to shed new light on Gutenberg’s printing technology. In 2018 and 2019 he taught the course “Intelligent Machinery, Identity, and Ethics” at the University of Washington, placing computing and AI in a broader historical and philosophical context. He has authored numerous papers, essays, op-eds, and book chapters, as well as two books: a novella, Ubi Sunt, and an interdisciplinary nonfiction work, Who Are We Now?. His upcoming book, What Is Intelligence?, will be published by MIT Press in 2025.