Deep RL and the future of AI

An interview with Professor Jakob Foerster conducted by April Cashin-Garbutt

Deep reinforcement learning (deep RL) is currently undergoing a revolution of scale. This subfield of machine learning combines reinforcement learning and deep learning, and new frameworks are delivering speed-ups of several orders of magnitude for many deep RL tasks.

This revolution is enabling researchers like Jakob Foerster, Associate Professor at the University of Oxford, to go after new questions spanning multi-agent RL, meta-learning, and environment discovery. In his recent SWC Seminar, Professor Foerster presented exciting examples from his lab, and in this Q&A he outlines his vision for the future of AI and the importance of open science.

Why is deep RL currently undergoing a revolution of scale?

This is largely driven by two factors coming together. Firstly, we have had the deep learning revolution that allows us to use graphics processing units (GPUs) efficiently for deep neural networks.

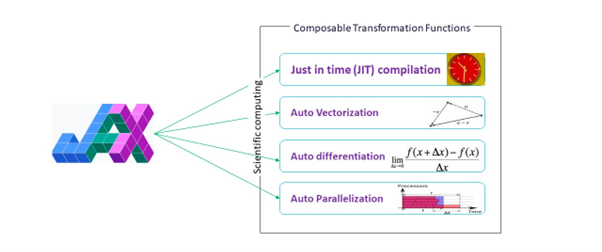

Secondly, and more recently, there has been innovation in frameworks for writing GPU-accelerated code that are much easier to use and more scalable than previous ones. JAX, in particular, is one of these options.

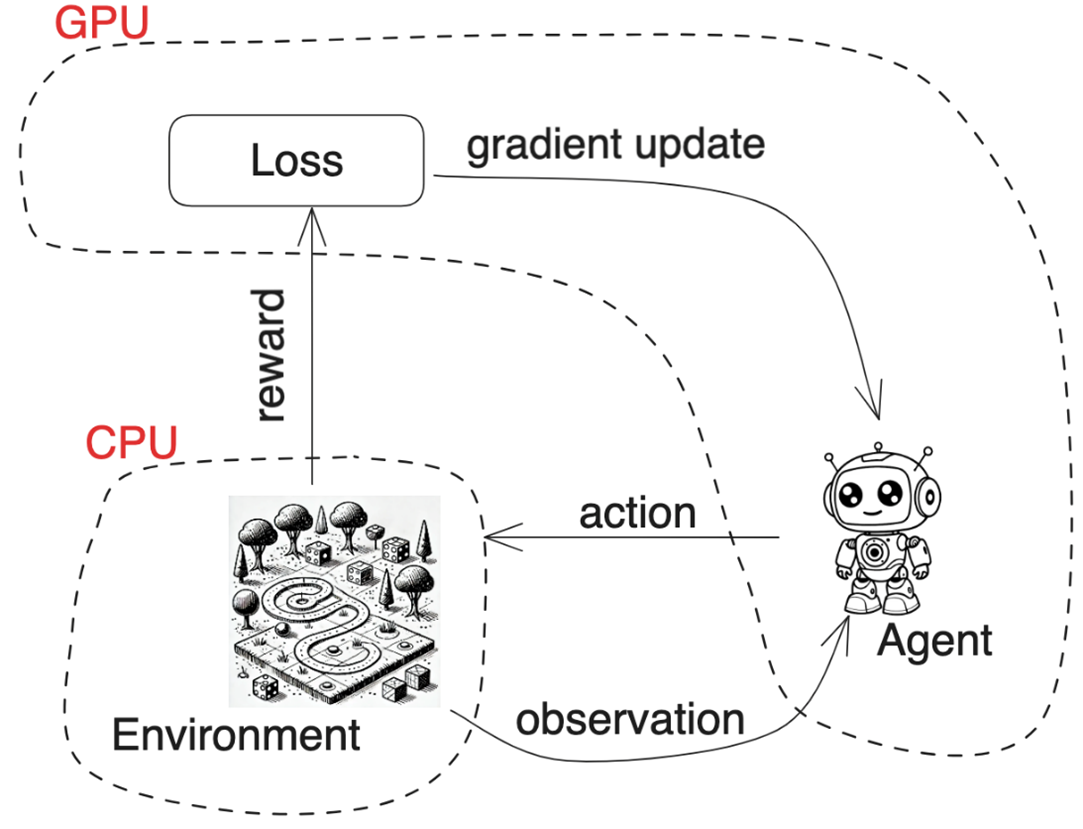

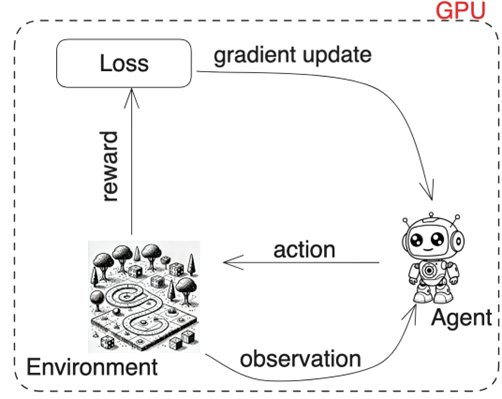

This allows us to write not just the algorithm, meaning the agent that takes actions and learns, but also the environment in the same GPU-accelerated framework. This means we can get rid of all the complexity associated with passing data back and forth between the GPU and the central processing unit (CPU), which makes algorithms slow and engineering-heavy.
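To make the idea of writing agent and environment in one framework more concrete, here is a minimal sketch in JAX. It assumes a toy 1-D environment and a linear policy invented purely for illustration: `TARGET`, `env_reset`, `env_step`, `policy`, and `rollout` are hypothetical names, not code from Professor Foerster's lab. Only the JAX primitives `jax.jit`, `jax.vmap`, and `jax.lax.scan` are real library calls; they compile the policy and the environment step into one program and run thousands of episodes in parallel on the accelerator.

```python
import jax
import jax.numpy as jnp

# Hypothetical toy environment: an agent on a 1-D line tries to reach TARGET.
# Actions 0, 1, 2 move the agent by -1, 0, +1.
TARGET = 5.0
NUM_STEPS = 20      # episode length (fixed, so lax.scan runs a static loop)
NUM_ENVS = 4096     # number of environments simulated in parallel


def env_reset(key):
    # Random integer start position in [-10, 10), stored as float32.
    return jax.random.randint(key, (), -10, 10).astype(jnp.float32)


def env_step(state, action):
    # Pure function of (state, action): returns next state and reward.
    new_state = state + (action - 1).astype(jnp.float32)
    reward = -jnp.abs(new_state - TARGET)
    return new_state, reward


def policy(params, state, key):
    # Hypothetical linear policy: one logit per action, sampled via softmax.
    logits = params["w"] * state + params["b"]
    return jax.random.categorical(key, logits)


def rollout(params, key):
    # One full episode; lax.scan keeps the loop inside the compiled program.
    key, reset_key = jax.random.split(key)
    state = env_reset(reset_key)

    def step_fn(carry, _):
        state, key = carry
        key, action_key = jax.random.split(key)
        action = policy(params, state, action_key)
        state, reward = env_step(state, action)
        return (state, key), reward

    _, rewards = jax.lax.scan(step_fn, (state, key), None, length=NUM_STEPS)
    return rewards.sum()


# vmap runs many independent episodes side by side; jit compiles agent and
# environment together, so no data is copied back to the CPU mid-episode.
batched_rollout = jax.jit(jax.vmap(rollout, in_axes=(None, 0)))

params = {"w": jnp.zeros(3), "b": jnp.zeros(3)}
keys = jax.random.split(jax.random.PRNGKey(0), NUM_ENVS)
returns = batched_rollout(params, keys)  # shape (NUM_ENVS,), stays on device
```

The design choice that makes this work is that every step is a pure function of explicit state and random keys, which is what allows `jit` and `vmap` to transform it. A learning update on `params` could be placed inside the same jitted function without changing that structure.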

In practice, this means that at academic scale, we can run experiments around 1,000 times faster than before. Research questions that needed a whole data centre to answer with the previous technology can now be answered with a few GPUs in an academic lab.

How has your recent work been enabled by this revolution?

We've been able to pursue a lot of different areas that were beyond the scope of academic labs until a couple of years ago. For example, we are aiming to develop better learning algorithms.

One line of work tries to improve how agents learn together in settings with conflicting interests, by allowing one agent to shape or influence the learning behaviour of others.

I think these questions can also give us fundamental insights, not just into how to improve learning algorithms, but also into what kinds of learning algorithms humans might be running in multi-agent interactions.
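The interview does not spell out a specific algorithm for one agent shaping another's learning. One common way to formalise the idea, used in opponent-shaping methods such as LOLA, is to let an agent differentiate through the other agent's anticipated learning step. The JAX sketch below is a simplified look-ahead in that spirit, not a reproduction of any published method: the two payoff functions, the step size `ALPHA`, and all function names are hypothetical choices made for illustration.

```python
import jax
import jax.numpy as jnp

ALPHA = 0.1  # assumed learning rate of the opponent's naive gradient step


def value_1(x, y):
    # Hypothetical payoff for agent 1 (parameter x); agent 1 maximises this.
    return x * y - x ** 2


def value_2(x, y):
    # Hypothetical payoff for agent 2 (parameter y); its interests conflict with agent 1's.
    return -x * y - y ** 2


def naive_grad_1(x, y):
    # Agent 1 treats the opponent's parameter y as fixed.
    return jax.grad(value_1, argnums=0)(x, y)


def shaping_grad_1(x, y):
    # Agent 1 anticipates one naive gradient-ascent step by agent 2 ...
    def lookahead_value(x):
        y_next = y + ALPHA * jax.grad(value_2, argnums=1)(x, y)
        return value_1(x, y_next)

    # ... and differentiates through that step, so its gradient also accounts
    # for how changing x changes what agent 2 learns.
    return jax.grad(lookahead_value)(x)


x, y = jnp.float32(1.0), jnp.float32(1.0)
g_naive = naive_grad_1(x, y)
g_shaped = shaping_grad_1(x, y)
```

At `x = y = 1`, the naive gradient is -1.0, while the shaping gradient is -1.4: the extra -0.4 comes from the look-ahead, namely agent 2's updated parameter (0.7 instead of 1.0) plus the term that flows through agent 2's update rule.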

What new questions does this hyperscale allow you to go after and which are you most excited about?

I think the central theme of our research is how to make reinforcement learning sample efficient enough to deploy in the real world. By sample efficient, I mean that we want a model to reach a given level of performance with fewer samples of real-world interaction.

This is currently a huge limitation, as today's algorithms require significant engineering effort to make them work on any new problem. Instead, we're searching for algorithms that are sample efficient, robust, and generalise well enough that someone can use them off the shelf on a real-world problem.

How do you ensure the scalable machine learning methods your lab is developing are safe?

The algorithms that we're working on are only going to be able to solve the tasks that they get trained on. They are not the extremely powerful, open-ended generative AI systems that come with their own set of safety concerns.

However, somebody could take these algorithms and potentially use them to optimise for nefarious purposes.

To help counter this, we will be open sourcing everything we have. This will enable people working on cybersecurity and defence to see everything, which will help ensure we can protect our systems against these kinds of adversarial attacks.

This is the only hope I have for AI safety in the long term: using the collective intelligence of many different scientists and researchers, and making science accessible to everyone. I think this is the best way forward for AI safety at the moment.

You’ve recently returned to Meta AI in a part-time position. Please can you tell us about the goals of the new team you are building to investigate multi-agent approaches to universal intelligence?

My own goals for moving to Meta are twofold. First of all, as a scientist at Oxford, I run a big lab, and there's a limit to how much of my headspace I can put into any individual project. And while I'm a fairly hands-on supervisor, I do miss being deep in a hard problem and making progress with a team of peers.

Second, I fundamentally believe that open science is good for the world. FAIR is one of the few places that goes after ambitious goals with large-scale resources and a lot of talent. I want to support this because I want to foster and strengthen the role of open science, and in AI technology in particular.

I'm not a big fan of the accumulation of power in closed-source AI companies. My goal for the team at Meta is to try to leapfrog the current closed-source generative AI efforts by building new, creative approaches towards generalist intelligence.

What do you think the future holds for deep RL?

Firstly, I hope that we can use deep RL as a method that works reliably out of the box for solving useful tasks in the real world, which I think will be exciting and worthwhile.

It will also be a very useful computational modelling tool for people trying to understand behaviours and learning processes. It will become a tool in the neuroscientific toolbox.

I think deep RL will also play an increasing role in addressing the shortcomings of current generative AI approaches and large language models, because these systems are not very good at decision-making or taking actions.

Reinforcement learning is all about taking actions in the real world. It will play an important role once all the current large language model approaches have been saturated.

What impact do you think our increasing understanding of biological brains will have on AI?

I think the impact hasn't been as large as one might have hoped. Some of this is probably because people tend to live in silos, and as an academic community we're really bad at bridging the gap between different disciplines.

But I'm quite optimistic, and I hope that this might change. One reason is that it is entirely conceivable that within a year we'll have tools that allow me, as a scientist in machine learning, to translate a paper from neuroscience into terminology that matches my own understanding. So far, the lack of progress has come from social structures and conventions that create barriers between communities. I think AI can really help address this.

There's another reason to expect more progress: we're moving towards automating parts of the scientific process. It might be that the next big breakthrough in machine learning comes out of neuroscience, but then a machine learning system, not a machine learning person like me, will implement that breakthrough in a large-scale experiment.

I'm quite optimistic that we will see an acceleration in our understanding of the brain, leading to better machine learning, leading in turn to more understanding of the brain. It’s a loop, like using computer chips to build better computers.

Ultimately, most revolutions in the world come from closing the loop, and I’ve been looking for ways to do that. I think this is a good way to go, and it will accelerate not just our understanding of the brain, but also our ability to build smart systems.

About Professor Jakob Foerster

Professor Jakob Foerster leads the Foerster Lab for AI Research at the Department of Engineering Science at the University of Oxford. During his PhD at Oxford, he helped bring deep multi-agent reinforcement learning to the forefront of AI research and spent time at Google Brain, OpenAI, and DeepMind. After his PhD, he worked as a (Senior) Research Scientist at Facebook AI Research in California, where he developed the zero-shot coordination problem setting, a crucial step towards safe human-AI coordination. He was awarded a prestigious CIFAR AI Chair in 2019 and an ERC Starting Grant in 2023. More recently, he received the JPMC AI Research Award for his work on AI in finance and the Amazon Research Award for his work on large language models. His past work addresses how AI agents can learn to cooperate, coordinate, and communicate with other agents, including humans.

Currently, the lab is focused on developing safe and scalable machine learning methods that combine large-scale pre-training with RL and search, as well as on the foundations of meta- and multi-agent learning. As of September 2024, Professor Foerster has returned to FAIR and Meta AI in a part-time position to help build out a new team investigating multi-agent approaches to universal intelligence.