How can the songbird model system help us understand the production of speech?

An interview with Professor Michael Long conducted by April Cashin-Garbutt, MA (Cantab)

Professor Michael Long, NYU School of Medicine, recently gave a seminar at the Sainsbury Wellcome Centre on uncovering circuit principles that enable robust behavioural sequences. I caught up with him to learn more about his work and the clinical implications for language development.

What first prompted you to focus on the songbird model system?

When I trained as a doctoral student, we worked on brain slices and learnt a lot about how cells work and how they interact with other cells, but I became increasingly frustrated by the lack of behaviour. What I wanted to know was how all of these cellular mechanisms could be put to use in a complex behaviour.

What I love about songbird behaviour is that a small bird can listen to his father and then practise intensely for two or three months, almost a million trials, all by himself, because there is an internal need to produce the song. It is critical that the male produces this behaviour so that the female can hear the song and decide whether or not to mate with him.

This motor skill involves all of the muscles working in unison to hit all the notes, and I think it has less to do with music and more to do with swinging a golf club, as you have to coordinate lots of muscles.

I think that is what fascinates me about the songbird model. It has this perfect behaviour, which you can watch develop, and you can understand all the wiring, cells and properties that work together to produce this critical behaviour in the bird.

In zebra finches, only the males sing, and they generate this precise behaviour from a distinct network of cells. If you look at the brain itself, you can see the ball of cells involved in producing this behaviour without even staining them, and that is both a blessing and a curse.

Humans differ, as our cells are multimodal, which means we have cells that can be involved in many different behaviours. But the zebra finch is a case where an elaborate but single behaviour, which takes a million repetitions to get right, is held in one part of the brain, which means we can really figure out how it works.

How do we know that there aren’t other brain areas involved?

We know this from a combination of recording and causal studies. There are two main lines of recording evidence. The first is recording all over the brain, for example with electrodes, which shows that these cells are active only during singing and not during other behaviours such as flying, and that areas outside this region are not active during singing.

The other is to use immediate early genes, which mark active neurons: these areas light up like a Christmas tree and other areas don't. This gives us a clue that these areas are involved in producing the song.

Then we ask: what happens when we turn these neurons off? If you turn them off, the bird can still approach a female, but no song comes out. This shows that these regions are causally involved, because when you perturb them you see a real deficit in behaviour.

In humans, we would call this expressive aphasia. A lot of our brain is tied up in speech production, and if someone has a stroke in those regions they can develop expressive aphasia, where they can't produce words. This is analogous to silencing these regions in the songbird.

Can you please outline the two distinct models for explaining the neural mechanisms underlying the production of the zebra finch song? How do you test these models?

There are many models and in my seminar at SWC I talked about our own network models, trying to explain how tens of thousands of cells all work together to produce this song. We worked together with computational neuroscientists to try different models and see how well they held up. There is a clear winner and it is not the one we thought it would be.

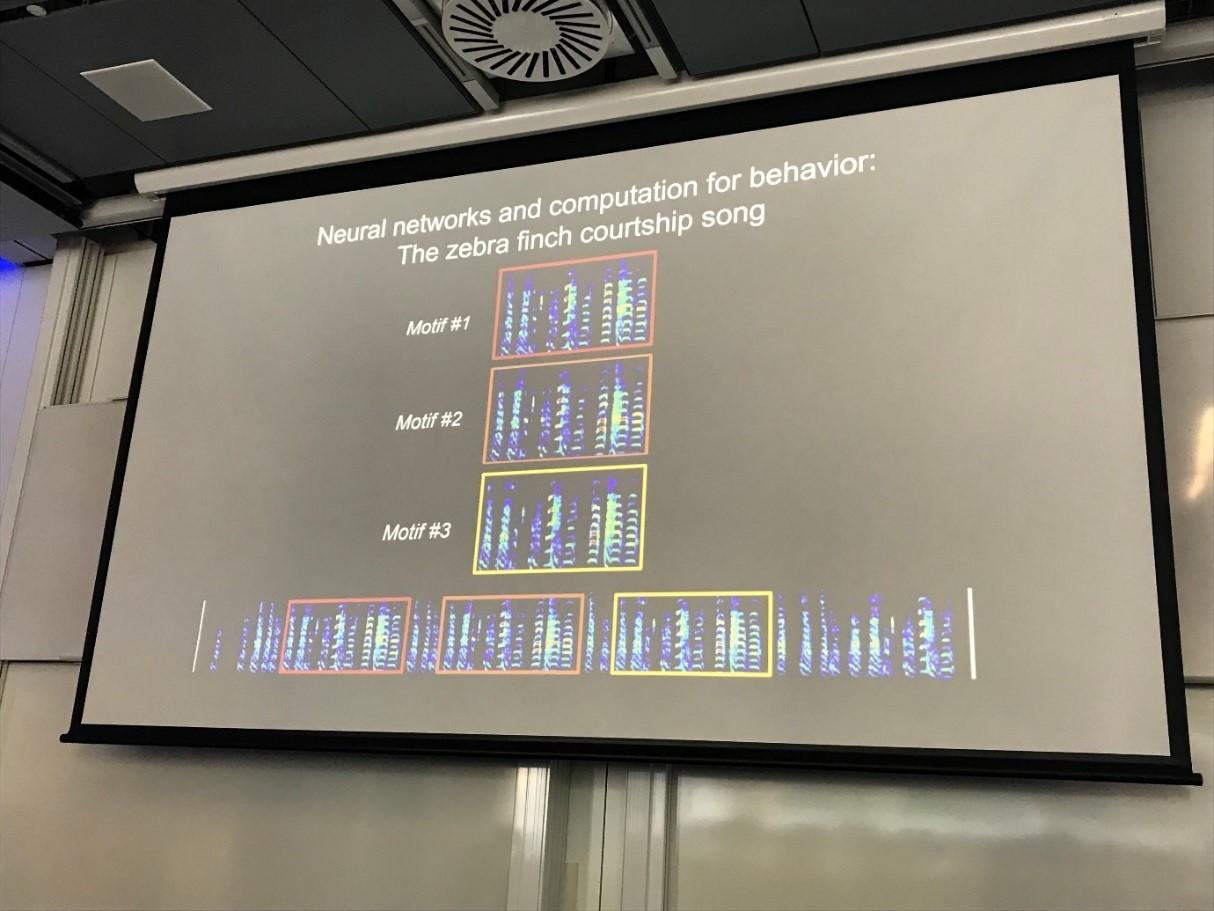

Previous work suggested a gesture hypothesis, in which neurons in the bird's pre-motor region appear to be active only at certain notes, so not all parts of the song are created equal. As an analogy, imagine playing the flute: to change note, you can do a few things, such as changing the amount of air you blow into the flute or changing the position of your fingers. The bird does the same thing: he either changes the musculature of his vocal organ to achieve a different note, or he changes the amount of pressure going into that organ.

Recording from these cells is technically very difficult because the birds are so small, so only 5 cells had previously been recorded in the bird. These 5 cells lined up with the gestures; however, we thought more than 5 were needed, as that is not enough data to draw a conclusion.

We decided to use two-photon microscopy, which has bloomed into a really important tool for recording activity in neuronal populations. The problem with two-photon microscopy is that we hadn't been able to teach a bird to sing under a microscope. However, I had a really creative student who developed an interface in which the birds feel very comfortable singing under the microscope, so now we can image hundreds of cells. Combining our data with data from other groups, we had over a thousand neurons.

We found that the neurons are active continuously throughout the song, almost like the ticks of a clock, so you can think of this as a clock hypothesis.

Why is it important that the variables you use are behaviourally relevant, and how do you ensure this?

The great thing about this work is that it is never ambiguous: the bird either sings or he doesn't. If you don't make him sing, it doesn't mean a thing!

If I go back to recording from a slice, I can learn an enormous amount about what that cell is doing or even how cells interact with each other, but to really understand how the system works we want to study a behaving animal that is performing the action. The bird has taught me that this is the most critical piece, at least in my own work, for understanding how the brain works.

The aim is not to extrapolate widely from a reduced system, but instead to come up with the most unbiased way of observing the whole circuit as it is fully engaged in a complex behaviour.

Does your work have clinical implications for language development in humans?

We’ve written three human papers in the last two years. In the bird, we focused on one of these song-only regions, the pre-motor area, which we thought would be an important clock for driving the song. If the bird has a metronome in its brain while it sings, it would be interesting to change the speed of the metronome and ask whether the song changes.

To test this idea, we built a small cooling device that sits on the head and lowers the temperature of that region by several degrees, from 40 to 32 °C, and the bird started to sing in slow motion. We found this very interesting, so we tested a few models in this way, but then we realised this could be clinically relevant as well.

If I’m going into the brain of a person with a tumour, ideally I want to remove the tumour while leaving the rest of the brain unperturbed, because if you scoop out the language centre the person could wake up aphasic.

Since the 1930s, the typical way to identify these language centres has been to move a stimulating electrode across different parts of the cortex while the person is awake; if you apply current in the right place at the right time, you see speech arrest.

People with epilepsy have hyperexcitable cortex, so applying current can cause them to have a seizure, and this happens in about 40% of cases. So we thought: why don't we use our bird trick and go right into the human brain with a small handheld cooling device? The beauty of cooling is that you’re not exciting the tissue; if anything, you are focally inhibiting it.

We found that when we cooled Broca’s region, which has historically been thought to be important for producing speech, people spoke more slowly, just as the songbird sings more slowly. If we go one synapse down from there, into the primary motor cortex, the speech falls apart structurally, but its timing is not affected.

This dissociation not only taught us a lot about speech, what these two areas are doing and how they are separable, but it has also been useful clinically. We have done this in 22 patients, and we’re now working on new ways of recording from these patients to see what single neurons are doing, so that we can build models of how humans produce speech. This is something we’re very excited about.

I don’t want to study every animal in the pet store! I want to know what this can teach us about human brain function, and the parallels that exist are surprising.

About Professor Michael Long

Michael Long received his PhD from Brown University, where he worked in the laboratory of Barry Connors examining the role of electrical synapses in the mammalian brain. Dr. Long began work on the songbird model system during his postdoctoral years with Michale Fee at MIT.

Dr. Long moved to the NYU School of Medicine in 2010, where he is currently an Associate Professor and Vice Chair for Research of the Neuroscience Institute with a clinical affiliation in the Department of Otolaryngology.

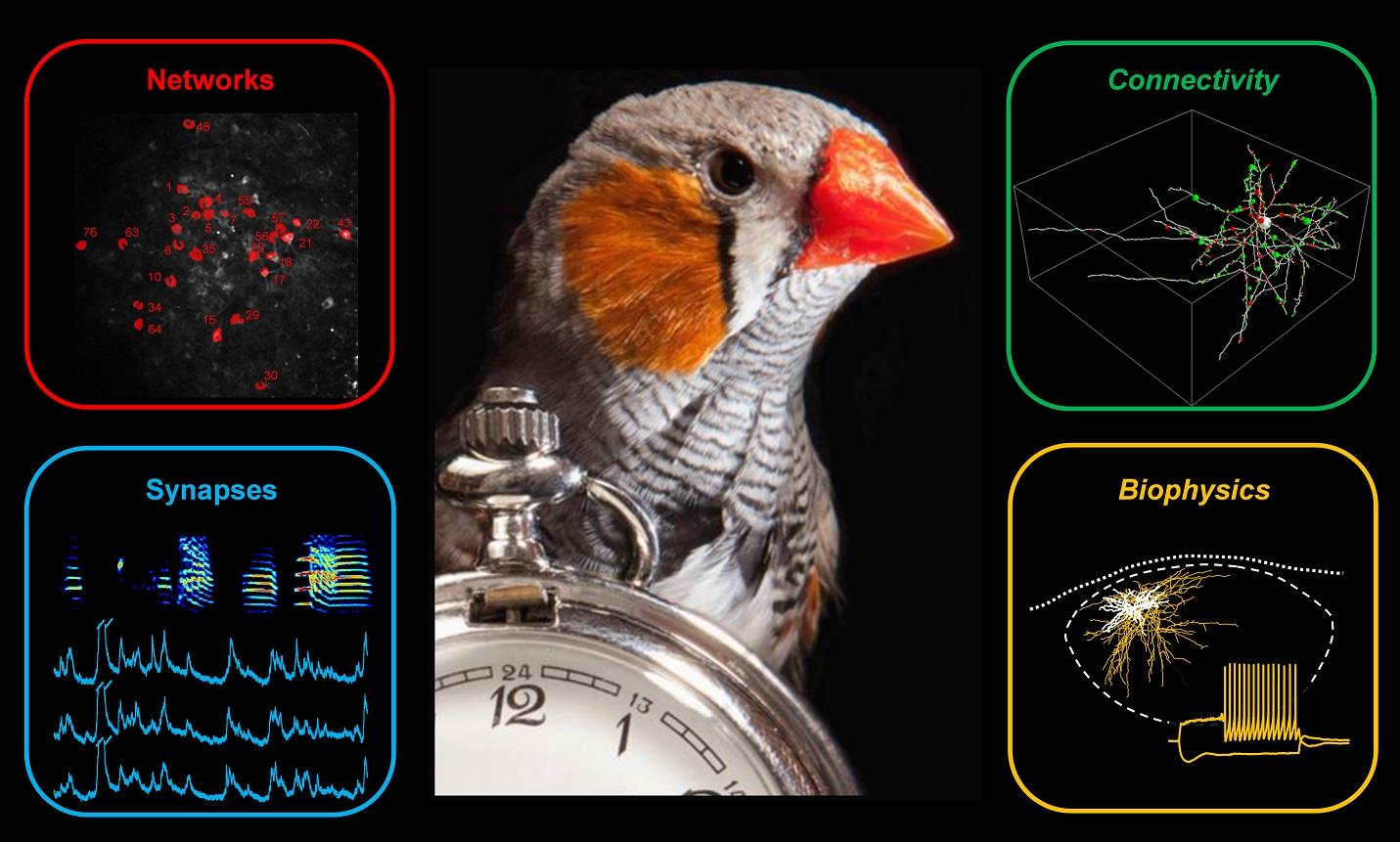

The Long laboratory combines a variety of fluorescence imaging, electrophysiological, and behavioural techniques to investigate the neural circuitry that gives rise to vocal production in the songbird as well as a non-traditional rodent species.

Through a variety of collaborations, Dr. Long has recently extended his findings into the clinical realm, with an emphasis on the brain processes underlying speech perception and production.