How do we experience time and form memories?

An interview with Dr Lucia Melloni, Group Leader at the Max Planck Institute for Empirical Aesthetics in Frankfurt am Main, Germany, conducted by April Cashin-Garbutt

Have you ever been to a country where you can’t speak the language? When you hear people talking in a language you don’t understand, the sound often appears as a continuous stream – and that’s precisely because it is.

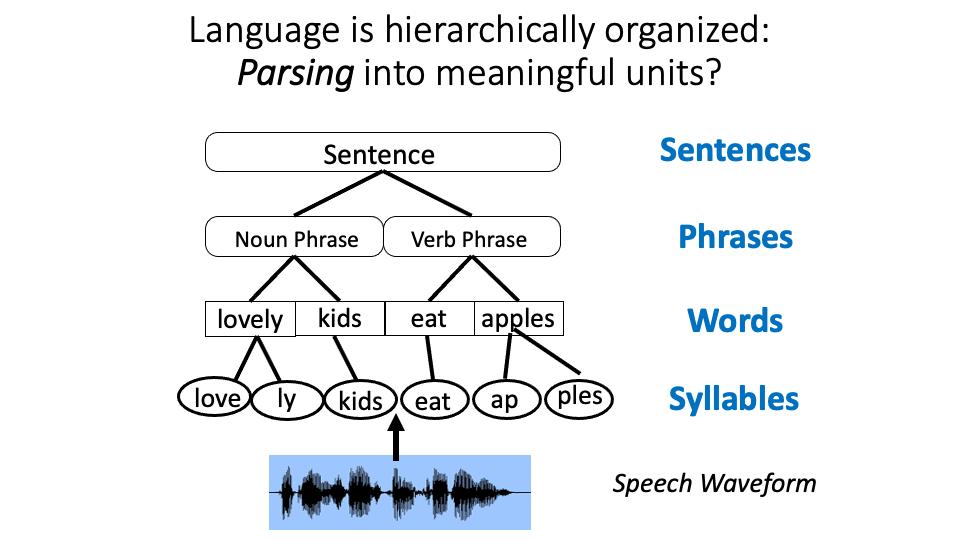

To perceive discrete segments, such as syllables, words and sentences, we must parse continuous sensory input into meaningful units. But how does the brain do this? Recent SWC seminar speaker, Dr Lucia Melloni, explains her research on how events structure cognition and memory, allowing us to go from continuous streams to segmented units.

In what way does our experience differ from perception in terms of continuity?

A surprising fact is that while our experience is continuous, we perceive it as a series of snapshots or events with a temporal order. One example is speech: when having a conversation, if we know the language, we typically experience it as if there are pauses, or breaks, between words and sentences. Yet if one looks at the physical sound waves, those pauses do not really exist, and where there are some, they often don’t mark the edges of words or sentences but occur in the middle of words, which means that they can’t be used as cues to separate words.

How do these snapshots shape our memories and how much is known about the mechanisms by which continuous input is segmented?

The same holds true for our memories: while we experience life in a continuous manner, our memories are composed of snapshots of experience. If somebody asked you – what did you do last Tuesday? – you are very likely to remember, no matter how many other things happened in the days in between. You might remember whether or not you went to the office, or even what you were wearing that day. Not only does your brain remember all these snapshots of experience, but it also remembers the order in which things occurred, and we are even able to put ourselves back at the starting moment of a memory from a particular point in a day and re-experience it.

The fact that we are able to do this means we must have ‘made a mark’ at the start of each experience thereby creating snapshots. This is a topic that has fascinated people for decades. Professor Jeff Zacks put forward an idea that we have models in our heads and we create event boundaries every time we cannot predict what is going to happen next.

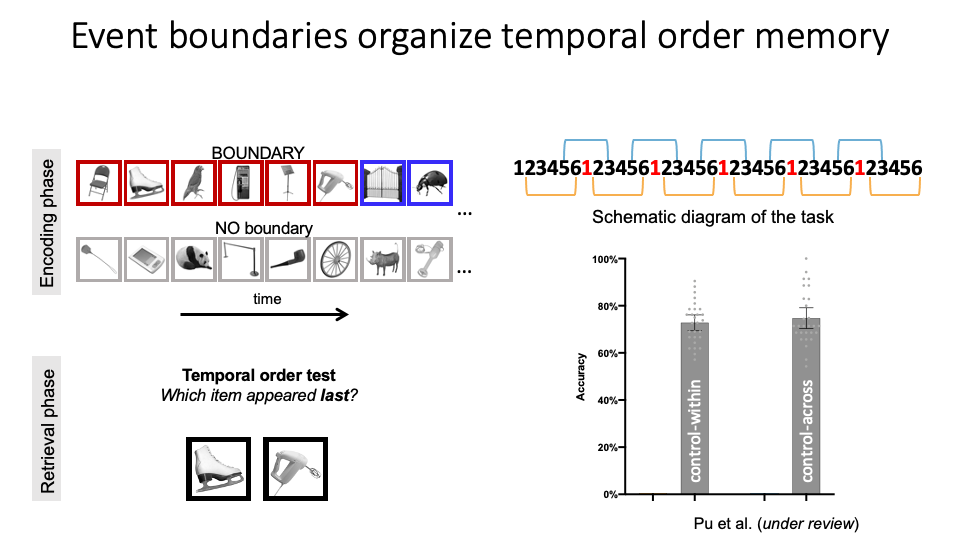

In a similar way in my research, we study for instance how people learn words. We learn how one syllable leads to another and creates a chain, then when the association goes down, we create a boundary, and form a word. We are also studying how those perceptual boundaries affect our memories, and in particular the order in which we remember snapshots of experience within and across event boundaries. While there are lots of hypotheses and people working on these issues, there’s still a lot to learn! For example, how do brain areas get together and which events make up perception?
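The boundary idea described above, where a chain of syllables is cut wherever the association between neighbours drops, can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the lab's actual method: "association" is modelled here as the transitional probability between adjacent syllables, the three words (`tupiro`, `golabu`, `bidaku`) are made up, and the `threshold` of 0.75 is an arbitrary choice that happens to separate within-word from across-word transitions in this toy stream.

```python
from collections import Counter

# Hypothetical three-syllable "words" and an arbitrary word order (illustration only).
WORDS = [["tu", "pi", "ro"], ["go", "la", "bu"], ["bi", "da", "ku"]]
ORDER = [0, 1, 2, 1, 0, 2, 0, 1, 2, 0, 2, 1]


def transitional_probabilities(syllables):
    """Estimate P(next syllable | current syllable) from adjacent pairs."""
    pair_counts = Counter(zip(syllables, syllables[1:]))
    first_counts = Counter(syllables[:-1])
    return {pair: n / first_counts[pair[0]] for pair, n in pair_counts.items()}


def segment(syllables, threshold=0.75):
    """Place a boundary wherever the transitional probability falls below threshold."""
    tp = transitional_probabilities(syllables)
    words, current = [], [syllables[0]]
    for prev, nxt in zip(syllables, syllables[1:]):
        if tp[(prev, nxt)] < threshold:  # association drops: close the current chunk
            words.append("".join(current))
            current = []
        current.append(nxt)
    words.append("".join(current))
    return words


# A continuous stream with no pauses between words.
stream = [syllable for i in ORDER for syllable in WORDS[i]]
segmented = segment(stream)
print(segmented)
```

In this toy stream, syllables inside a word always follow one another (probability 1.0), while the syllable after a word ending varies, so the dips fall at the word edges and the made-up words are recovered without any pauses in the input.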

How did you unravel the mechanisms supporting segmentation and the encoding of sequences?

The first question I had was, what is an event for the mind? One problem in research is that when we ask people to pay attention, that could alter their experience. For example, if you ask someone to listen to the violins when they attend a concert, they can pick them out, whereas had they not been asked, the violins would simply have been in the background.

How can we capture these chunks without having to ask people? To answer this question, I made use of language. There have been many years of beautiful research by linguists and psycholinguists demonstrating the building blocks of language. As we know what the building blocks should be – i.e., phonemes, syllables, phrases, sentences – we can capitalize on that and start looking for them in the brain.

Importantly, while most assume that those building blocks of language exist in the mind, how they are put together in the brain is still an open question. We are working on this using invasive and non-invasive techniques in human subjects. Our hope is that by starting from these ‘known’ chunks of the mind, once we answer this question, we can extrapolate to other areas.

What were your key findings?

One of the key findings was that we can determine how much people comprehend language just by looking at their brain activity. In particular, we can track in real time the different building blocks of language as they unfold, for instance the syllables, the phrases and the sentences. We can do that by simply asking subjects to listen to linguistic materials while collecting the electrical responses of their brain, either through non-invasive techniques such as electroencephalography or through invasive techniques such as electrocorticography in epilepsy patients. As they listen and comprehend language, brain waves track those different units.

We can also use that now to track how people learn those linguistic units. This becomes relevant for evaluating those who aren’t able to tell us themselves, such as babies and animals and adults who do not have overt responses. We developed tools to be able to look at these event segments without explicitly asking subjects to report their understanding. While in its infancy, the tools that we have developed are helping us to understand whether unresponsive patients are able to comprehend language, and even use that information prognostically to predict their recovery.

Were you surprised to find a hierarchical representation of sequences?

In a sense yes, and in a sense no. In language, we know that we have phonemes, syllables, words, phrases, sentences, paragraphs – since we already have this knowledge, we think it is simple. But imagine learning a new language such as Chinese – it is actually very difficult. We also have the same hierarchies of segmentation and narrative in movies. Building hierarchies and capitalising on sequences is something we do all the time: when we listen to music, move around in a city and so forth – it’s ubiquitous. By using the technique we developed we can now ‘see’ those units in real time and even track their acquisition, yet we don’t quite understand how they are built in the brain. We are at the very beginning.

How was order better preserved and remembered within an event than across an event?

As per Jeff Zacks' work, the hypothesis is that our continuous experience is parsed into events for the mind, which has several consequences for structuring our memories. Events are created based on models or schemas of what typical situations are, e.g., the sequence of actions involved in having dinner at a restaurant. Those schemas, or world situational knowledge, are combined with ongoing experiences to make predictions about what comes next. When something unexpected happens, an event boundary is created, and memories are separated.

Imagine if we had to remember every single sound: it would be very daunting. However, because we parse and chop it into meaningful units, we compress it in some sense. One way to overcome the limits of our working memory is chunking. If you are struggling to remember a phone number, for example, breaking it into smaller chunks allows you to remember it more easily. Yet an interesting consequence of that chunking mechanism is that remembering the temporal order is easier for things that happen within an event than for things occurring across events. We established that behaviourally and then attempted to explain it through computational modelling.

What are the implications of your research?

The broader goal of my research is to understand how other people experience the world, especially subjects who cannot overtly tell me how they feel, such as babies or animals. I have a dream of understanding how animals also feel, not just us as humans. How does it feel to be a bat, a dolphin, or a mouse? How do they experience, segment and remember? It would be amazing but also very important to know that for animal research and for making ethical decisions. My hope is that the kind of techniques we are developing will help us capture that, ideally in ways that do not require subjects’ explicit responses, so we can use those tools to interrogate people who are unresponsive, such as patients in vegetative states.

What is the next piece of the puzzle your research is going to focus on?

I’m just getting started! I would love to crack the question of how our experience changes based on the way we chunk information. I would also like to understand how we translate very high-definition, rich perceptual experiences into our memories. We know that we don’t remember everything and so there is a part that is lost, but we do not quite understand how that part is lost. How does this data compression happen – which information is dismissed or transformed, and which stays, in the translation between perception and memory?

About Dr Lucia Melloni

Dr Lucia Melloni received her PhD in Psychology from the Catholic University in Santiago, Chile and the Max Planck Institute for Brain Research, Frankfurt, Germany. She is currently a group leader at the Max Planck Institute for Empirical Aesthetics in Frankfurt am Main, Germany and a research professor in the neurology department at New York University Langone Health. Her lab is broadly interested in understanding the neural underpinnings of how we see (perception), how and why we experience what we see (consciousness), and how those experiences get imprinted in our brain (learning and memory), as well as the interplay between these processes. She uses multiple methods to address these questions, ranging from electrophysiological and neuroimaging methods to behavioral techniques and online surveys.