How to give an eye exam to a mouse

An interview with Dr Cristopher Niell, Associate Professor in the Department of Biology at the University of Oregon, conducted by Hyewon Kim

For decades, the brain’s visual cortex was often compared to a camera. But recent discoveries have shown that this long-studied area turns up its activity when an animal is running. These findings and technological advances have triggered a movement in the neuroscience field to pay more attention to the effect of naturalistic behaviours on sensory processing. In a recent SWC Virtual Seminar, Dr Cristopher Niell highlighted the need for studying ethologically relevant goal-directed behaviours in light of his research. In this Q&A, he expands on the use of prey capture in mice, as well as the octopus as a promising model organism, to answer such questions.

How do sensory input, behavioural context and on-going brain dynamics interact in natural visual processing?

We often think about vision as looking at a photograph of the world while sitting still. But we, and most other animal species, actually move through the world to figure out what’s out there and what to do. Most of our interaction with the world is moving through it, deciding what to do, and meeting different goals. If we are hungry we may look for a red apple, and if we are scared we may try to avoid things.

Over the past decade, there has been a big appreciation for how much these factors influence visual processing. People tended to think of the early visual areas in the brain as a camera. But a camera is actually very different because our visual system changes how it works depending on what you’re trying to do. A video camera adjusts for light and dark, but it does not know what you’re trying to do.

For us, the big discovery that set that off was recording from awake mice on a running wheel. We had no idea that when the mouse started running, the activity in the visual cortex gets turned up. If you’re thinking of the visual system as a camera, that doesn’t make any sense! But from a behavioural point of view, maybe it does.

One hypothesis is that when a mouse is sitting still, it uses olfactory or other cues such as hearing to guide its actions, but once it starts moving it needs to use vision to know where things are. Vision becomes much more important, so it gets turned up.

There are other hypotheses, like how our movement through the environment could change how we see things. For example, as I’m moving forward, a cup in front of me might look like it’s moving, but my brain knows that it was actually me moving, not the cup. To interpret what is out there in the world, the visual input from outside needs to be combined with what I’m doing at the time. So that’s a big picture reason why all visual processing is related to on-going activity.

The challenge was trying to access this experimentally. For a long time, the ideal scenario for studying vision was to remove all extra variables. The experimenter holds the subject still and presents simple stimuli like bars on a screen – that is very experimentally tractable, but doesn’t match how vision actually works. The only places where you sit still and look at a simple object on a screen tend to be very unnatural – at the optometrist’s office or on Zoom.

Why did you start studying ethologically relevant goal-directed behaviours in addition to trained tasks?

There were two big motivations. Firstly, most people study animals that are held still. The animals are typically on a running wheel where they cannot actually explore their environment. So the vast majority of what our visual system is doing on a daily basis is removed from these experiments. By allowing an animal to run around, all of a sudden you get other factors that come into play.

Secondly, we moved specifically toward natural behaviours because most of the time we are using vision to try and do something. There are times in our life where we look at e.g. a picture of the letter ‘A’ and pick out whether a feature is a vertical or horizontal line, but only in certain settings. In particular for a mouse, it wouldn’t sit still in the wild and try to make out whether a visual feature is a vertical or horizontal line. They’re using their vision to do something. So if you really want to study the circuit the way it’s naturally working, then you want to let the animal do what it normally does.

Imagine you’re trying to figure out how a bicycle works but you start with the idea that people use it as a ladder. Sure, you could climb on it and use it as a ladder, but to figure out what the bicycle actually does, you’d want to see somebody use it and you’d see that they actually ride it. If you are studying humans and have them in a functional magnetic resonance imaging (fMRI) scanner, you probably have them doing somewhat normal human things. But the types of tasks we were training mice to do, like discriminate horizontal versus vertical visual patterns, are not what they do naturally.

There are indeed interesting things you can do with those types of tasks, like figure out how mice learn to perform novel skills or make new types of associations – learning, plasticity, decision-making. But for studying vision and how an animal actually explores and interacts with the world, those traditional tasks were like forcing a square peg into a round hole.

What questions were you trying to answer from mouse studies of prey capture and natural scene encoding during behaviour?

We started to study prey capture as we looked at the range of visual behaviours mice might perform in the wild. Mice actually have poor vision; their eyes are small and they tend to be out at night. I often compare it to taking out my contacts. I can still get around – there are plenty of things that I can do without my contacts. I won’t be able to necessarily read the time on my alarm clock from a distance, but I can still get up and walk down the hallway fine.

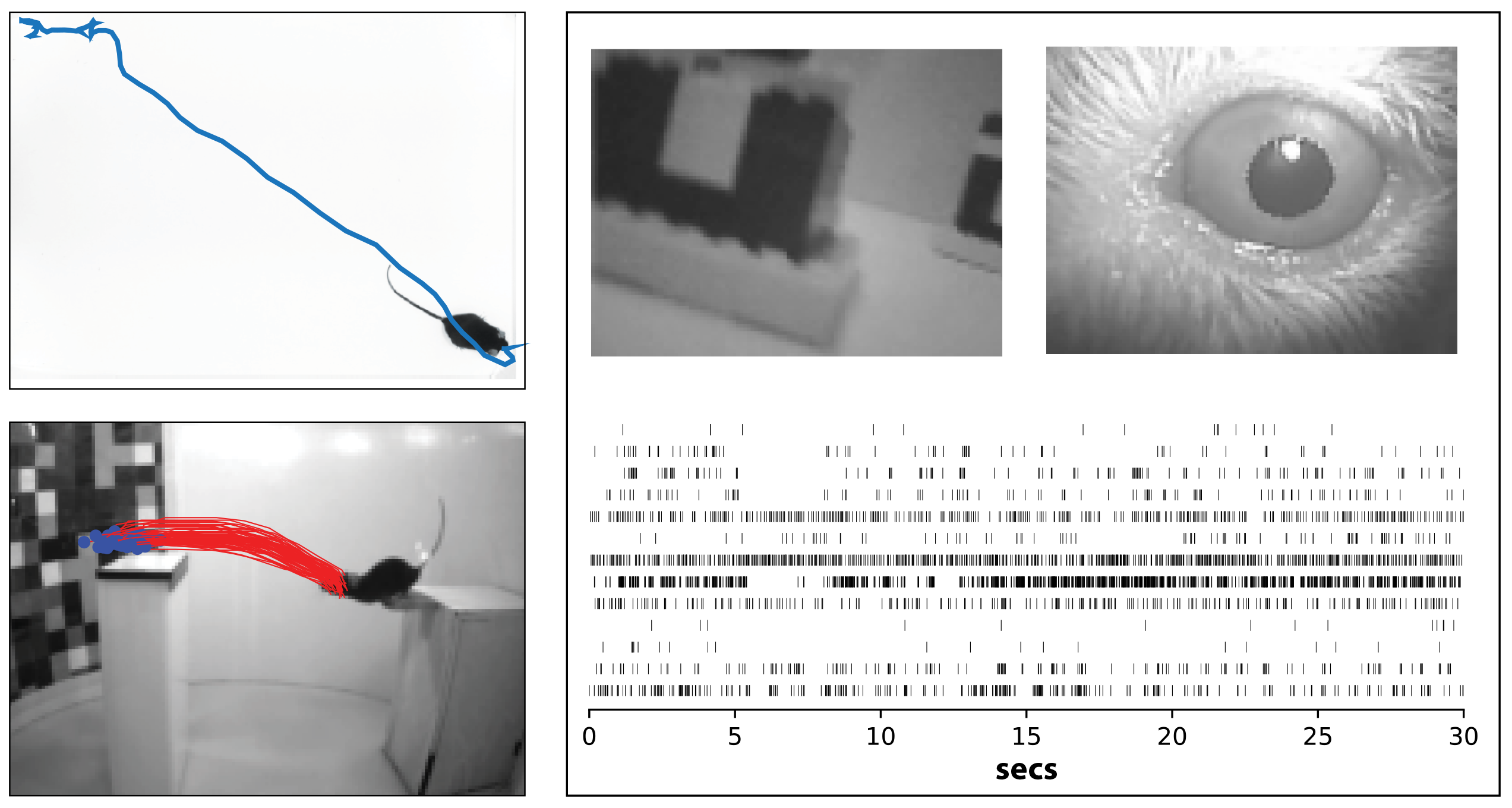

Upper left: Prey capture behavior in mouse. Blue line shows the path of a mouse as it pursues and captures live cricket prey. From Hoy et al, Current Biology 2016.

Lower left: Natural distance estimation behavior in mouse. Red lines show trajectory of a mouse jumping across a gap, using vision to estimate distance. From Parker et al, bioRxiv 2021.

Right: Recording visual responses in a freely moving mouse. Top panels show view of the mouse's eye and visual scene from head-mounted cameras, and lower panels show spikes from simultaneous silicon probe recording. Niell lab, unpublished.

The question here is: what can the mouse do with its blurry vision? A postdoc in the lab, Jen Hoy, figured out that mice catch crickets, and they use vision to do it. This was surprising at the time, especially for laboratory mice that lived in a cage and had never seen a cricket. We can then get more quantitative and ask how big an object they can detect. Given that a cricket has a fixed size, when is the mouse able to see it as it moves further away or closer up? You cannot give an eye exam to a mouse and ask it to say ‘A’ versus ‘B’, but you can ask whether it sees a cricket or not, by observing whether it chases it.
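As a rough illustration of the geometry behind this kind of "eye exam" (a sketch under stated assumptions, not the lab's analysis): an object of fixed size subtends a smaller visual angle the further away it is, so the distance at which a mouse stops chasing gives an estimate of the smallest angle it can detect. The cricket size, the distances and the function names below are hypothetical and chosen only for illustration.

```python
import math


def visual_angle_deg(object_size_cm, distance_cm):
    """Angle in degrees subtended by an object of the given size,
    viewed head-on from the given distance."""
    return math.degrees(2 * math.atan(object_size_cm / (2 * distance_cm)))


def max_detection_distance_cm(object_size_cm, min_angle_deg):
    """Farthest distance at which the object still subtends
    at least min_angle_deg of visual angle."""
    return object_size_cm / (2 * math.tan(math.radians(min_angle_deg) / 2))


# Hypothetical cricket size, for illustration only (not a value from the study).
CRICKET_SIZE_CM = 2.0

for distance_cm in (5, 10, 20, 40):
    angle = visual_angle_deg(CRICKET_SIZE_CM, distance_cm)
    print(f"{distance_cm:>3} cm away: cricket subtends {angle:.1f} degrees")
```

Read the other way round, if a mouse reliably starts chasing only within some distance, `visual_angle_deg` at that distance gives a behavioural estimate of the smallest angular size it responds to.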

Studying prey capture also let us see where mice are looking and how they move their eyes. Mice don’t have a fovea like we do, so where do they end up looking? A graduate student in the lab, Angie Michael, answered this question by letting mice hunt crickets while we put a miniature camera, like a GoPro, on each mouse’s head to see where its eyes are pointed.

For me one of the most insightful findings from prey capture was when we started looking at parts of the brain that mediate this, starting with the superior colliculus. A lot was known about the different cell types of the superior colliculus – what stimuli they respond to, their anatomy, and who is connected to whom – but we didn’t know how this all gets engaged in the process of an animal actually trying to do something.

If we go back to that bike metaphor, you could analyse all the parts and might guess that the wheel will roll, the pedals will push, etc. But what do people actually do with it? Similarly, we can know what the parts [cells] are in the brain, but when do these actually get engaged? What we went on to show is that some of these cells are involved in detecting whether the cricket is there, and other cells are involved in guiding the mouse’s movement toward the cricket’s location. Those were roles that had been hypothesized, like you could guess the purpose of bike parts. But here we can actually see it in action: what one particular group of cells is doing, versus another group.

How has the octopus been used as a model organism for studying vision in the past?

In the past, the octopus was very understudied compared to other species. There was a lot of work in the 60s and 70s figuring out what kinds of behaviours octopuses can generate. This work also produced beautiful anatomical drawings of cell types in the optic lobe. But then in the 70s and 80s, much of the neuroscience field moved on to other species. So many of the neuroscience tools that allowed us to learn about standard lab species, such as mice, primates, and flies, were never applied to the octopus.

One of the most fundamental approaches in visual neuroscience is to record from a neuron and see what it responds to, which is termed its receptive field. For technical reasons, that hadn’t been done in the octopus. So our goal was to apply methods developed in other species over the past 10-20 years, in order to determine how neurons in the octopus brain process visual information.

Oftentimes in neuroscience, and science in general, we are working on a topic that has been studied extensively, and within that we find a particular question that hasn’t been answered yet. So it can feel like filling in the details. Some of these are very important details, but the overall framework is there, so you fit your knowledge into that. But with the octopus, it’s almost completely unknown! It’s so rare in neuroscience to find a problem where so little is known, so I find that fascinating.

How do you foresee the octopus shedding light on neural circuits that produce visually guided behaviour?

Through convergent evolution, octopuses have eyes that are eerily similar to ours. But their brain is completely different from ours. It is often described as alien-like: they evolved independently from us, so nothing looks like anything in our brain. For example, there is no visual cortex in the octopus. Imagine if somebody handed you an alien brain and told you to figure out how it works. It’s that kind of level of mystery. So for me, a big draw was this wide-open, unsolved mystery in a creature that is as fascinating as the octopus.

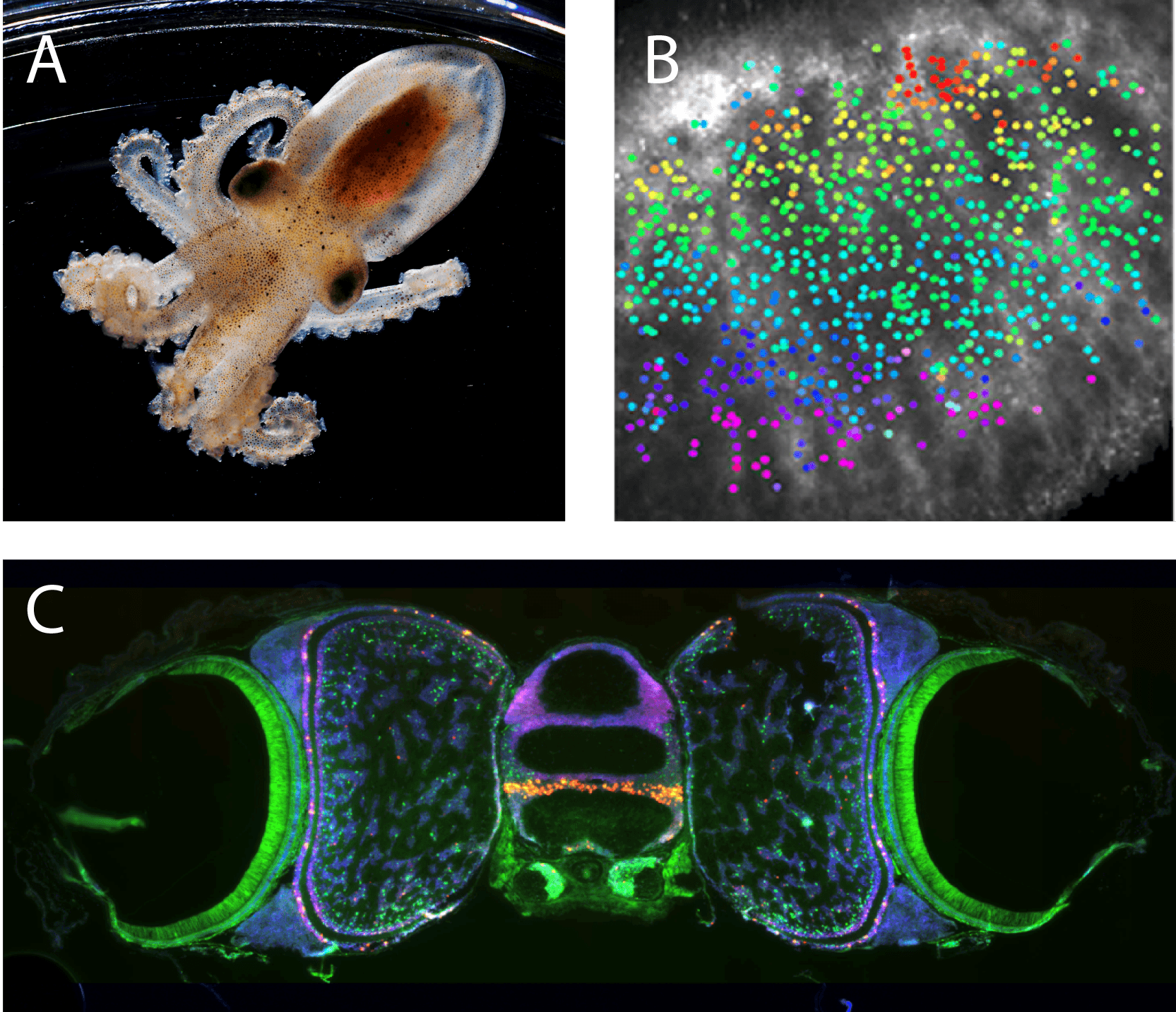

Upper left: Photo of juvenile Octopus bimaculoides, courtesy of Marine Biological Laboratory.

Upper right: Functional organization of the octopus visual system. Colored points correspond to the location in the visual scene that each neuron responds to, demonstrating a retinotopic map. Niell lab, unpublished.

Bottom: Molecular organization of the octopus visual system. Fluorescent in situ hybridization shows the expression pattern of tyramine beta-hydroxylase (red) and Pax6 (green) along with cell nuclei (blue). Niell lab, unpublished.

There are also some interesting questions from a scientific perspective. Their eyes evolved independently, but ended up looking a lot like ours. The question is – their brain may look different, but is it performing similar computations to ours? Did evolution come up with the same computational solution, e.g. running the same programme on a Mac versus a Windows computer? Even though they look different, it may be the same algorithm running under the hood. How does evolution choose the computations (software) and different types of hardware?

On the other hand, it’s also possible that they do completely different computations. Particularly because they live underwater and do different types of behaviours, maybe their brains evolved to perform different kinds of computations. A lot of recent advances in machine vision, like using neural networks for facial recognition, are roughly modelled on how our visual system is hierarchically organised. So if you take a completely different organism and figure out how it works, maybe we can come up with different computational foundations for vision.

What have been some meaningful implications of your research so far?

One of the big implications has been the recognition of different types of signals that go into processing the visual scene, such as the effect of running that I described earlier. We tend to think of different parts of the brain very independently. But the signals that are shared across brain areas could be responsible for some of the big mysteries of how the brain works.

I also don’t want to take credit for all this myself! We filled in one part of this: movement affects vision. But there are lots of other people and together this has created a big trend over the past decade. For example, both Anne Churchland at CSHL and the Carandini/Harris lab at UCL showed that how the mouse’s face is moving is represented all across the cortex. Furthermore, Karl Deisseroth’s lab at Stanford showed that it is possible to tell whether the mouse is thirsty or not almost anywhere in the brain you record. Before, we would have thought there must be a thirst centre in the brain that keeps track of thirst. But all over the brain, you can find signals for whether the mouse is thirsty. So it fits into this overall trend that a lot of signals are shared across multiple parts of the brain. That shifts the way we think about processing.

What is the next piece of the puzzle your research is going to focus on?

We’re recognising that many of these signals are present throughout the brain, but the next step forward is to understand how they get used and what their computational role is. That is the next big question for me, and what a lot of the approaches we are developing are aimed at.

If you want to figure out how these things come together, you need to let the animal do something that brings them together in a meaningful way. Having an animal held still while it is trying to move will make that much harder. So now that we have natural behaviours set up and methods to record in freely moving mice, our next direction is figuring out the computations: when a movement signal comes into a visual area, how does it get combined with the visual processing, and how does this facilitate behaviour?

It was great visiting SWC since a lot of what we are doing is very similar to what’s being done there. The researchers there are my close colleagues, and they’re already thinking about many of the same problems in similar ways.

About Dr Cristopher Niell

Cristopher Niell received his B.S. in physics at Stanford University, doing research in single-molecule biophysics with Dr Steven Chu. He then remained at Stanford to obtain his PhD with Dr Stephen Smith, studying the development and function of the zebrafish visual system. He then moved to UCSF to perform post-doctoral study with Dr Michael Stryker, where he initiated studies of visual processing and behavioural state in the mouse cortex. He established his lab at the University of Oregon in 2011, where he is now an Associate Professor in the Department of Biology and Institute of Neuroscience. His lab uses a combination of neural recording and imaging methods, operant and ethological behaviours, and computational analysis to study the neural circuits that underlie visually guided behaviour and perception.