Scaling up and standardizing neuronal recordings to build a Brain Observatory

An interview with Jérôme Lecoq, Ph.D., Allen Institute, conducted by April Cashin-Garbutt, MA (Cantab)

Jérôme Lecoq, Ph.D., Senior Manager, Optical Physiology, Allen Institute, recently gave a seminar at the Sainsbury Wellcome Centre on large-scale calcium imaging and building standardized, open brain observatories. I spoke with Jérôme to find out more about the Brain Observatory, how technical developments have helped to scale up neuronal recordings, and his views on standardization and the future of neuroscience.

Can you please give a brief introduction to the Brain Observatory and explain the vision behind this project?

The Brain Observatory project started a couple of years ago when we realised that our existing datasets on connectivity, cell morphology, electrophysiological properties and the classification of cortical cell types were insufficient to build models of the cortex. We needed datasets on functional activity in vivo, i.e. how cells perform tasks in an animal, and so we set ourselves up to build a platform to record the activity of cortical cells in vivo.

At the beginning our goal was to map the functional properties of cells across the visual cortex, but our vision for the Brain Observatory is also to leverage this high throughput platform to test different hypotheses on cortical function via more targeted experiments.

How have recent technical developments at Stanford and the Allen Institute helped to scale up neuronal recordings?

At Stanford we achieved a couple of improvements on existing technologies. Firstly, we showed a path to image multiple brain areas simultaneously using two-photon imaging. Since then, similar approaches have been used by other labs, so now several places have access to multi-area imaging. Relatedly, we have also extended the field size of current microscopes.

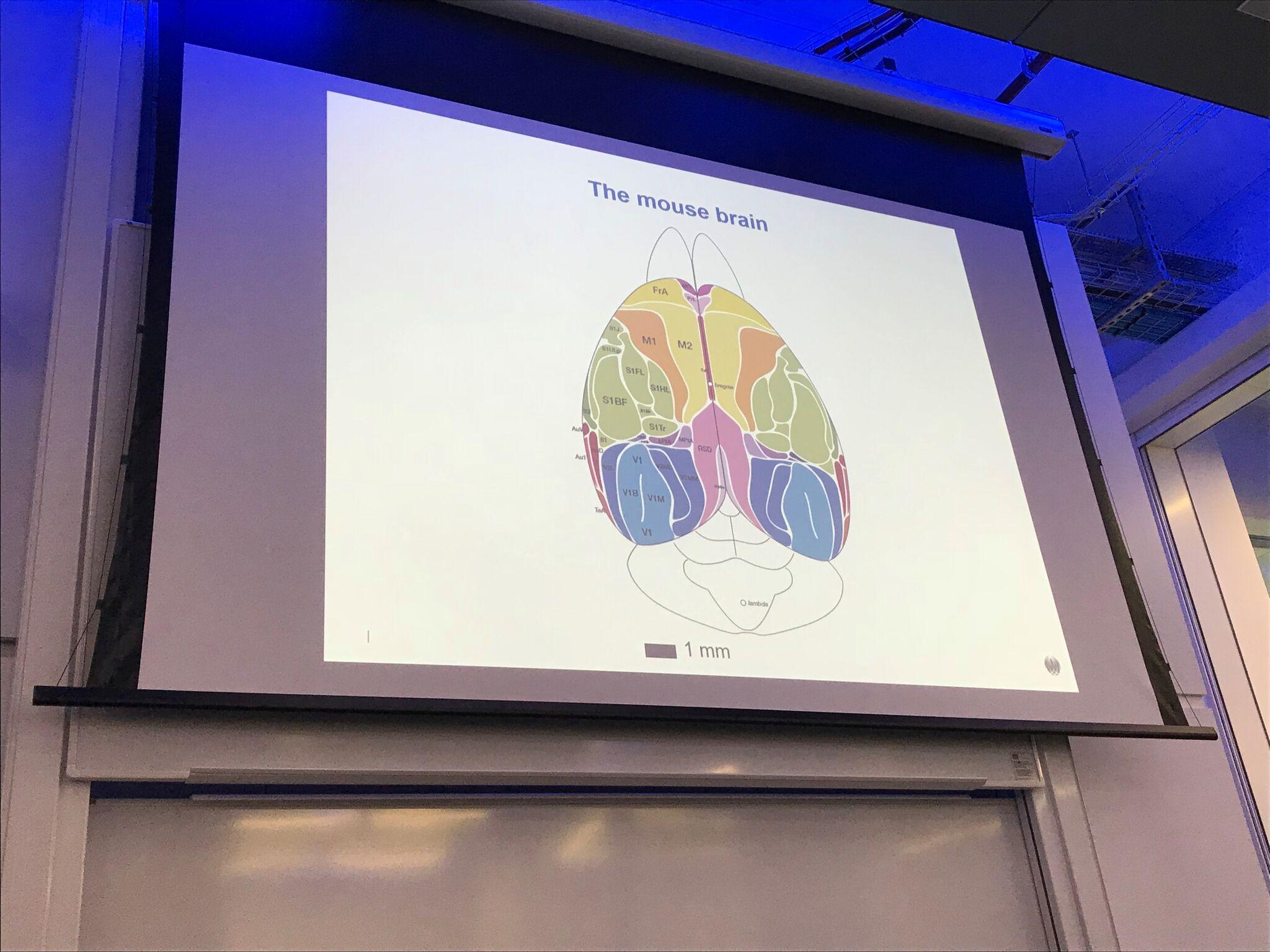

We have also demonstrated surgical improvements. For example, we can now open up the entire dorsal surface of the skull, so that you can choose any neurons you want to record from across the entire dorsal surface of a mouse brain.

My opinion is that neuroscience is in a place where we now have very efficient tools and it is now a matter of using them. Although a lot of people are still focussed on improving the tools, we have made a lot of progress in the last decade.

Why is it important to standardize neuronal recordings for high-throughput in vivo experiments?

There are multiple reasons for standardizing neuronal recordings. The first is that it is difficult to train new experimentalists to do recordings, so standardizing will make experiments more efficient and more reproducible across people. As neuronal recordings are very technical, standardization will ensure people performing the same experiment do it the same way.

There is also a general problem in neuroscience where experiments cannot be reproduced between labs and it is not always clear why. At the Allen Institute, we take extra care to document our processes so that anyone could take our procedures and replicate them, and we are hopeful this will limit such variability.

Can you please explain how you have leveraged the Brain Observatory to survey cortical activity?

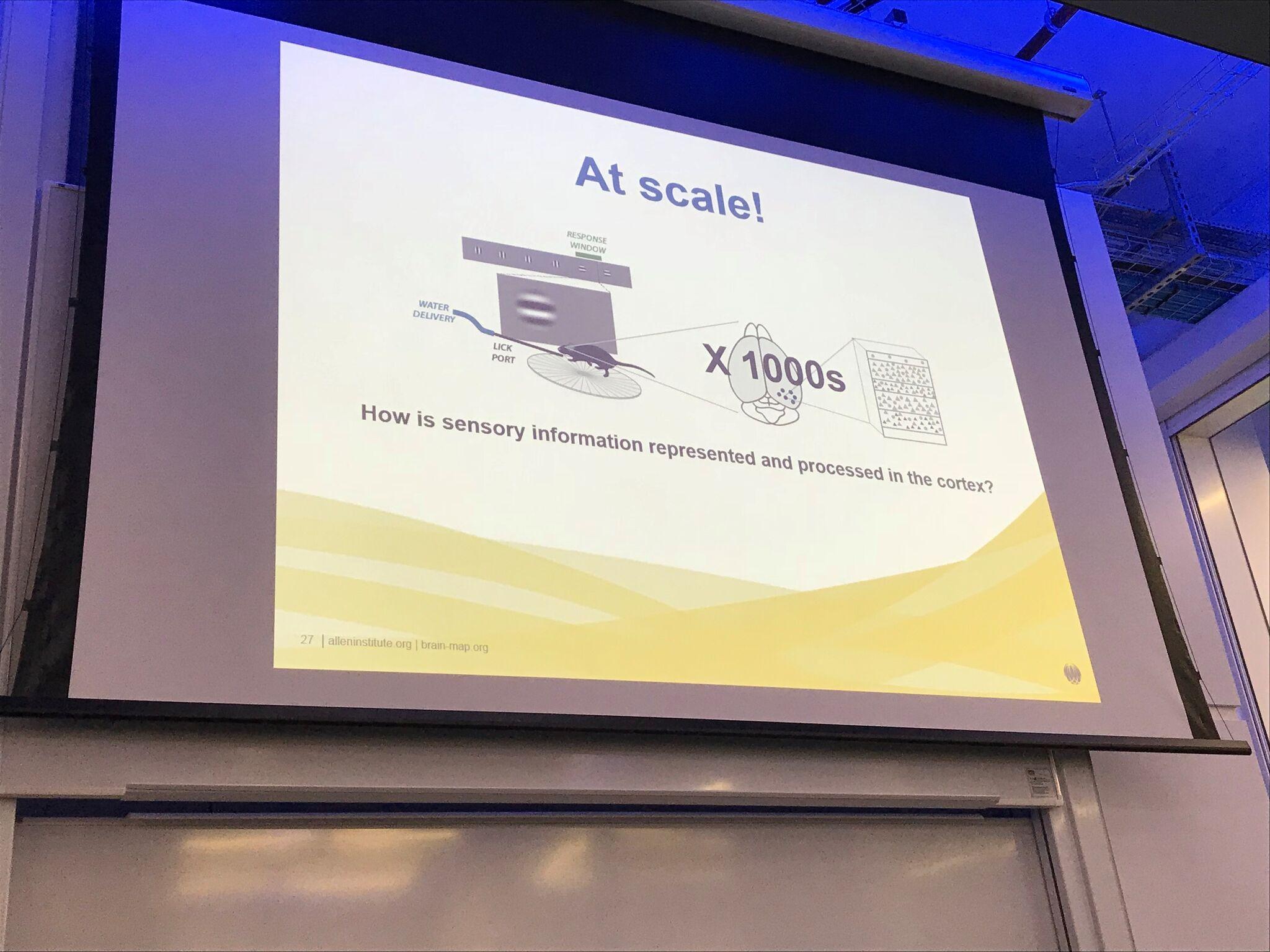

We were interested in visually evoked activity in the cortex of mice, so we designed a standardized set of visual stimuli so as to look at the distributions of these cellular responses across visual areas, cortical layers and neuronal populations. The Brain Observatory allowed us to scale this as we can process many more experiments than is typical.

How will the Brain Observatory help neuroscientists answer age-old questions like what are the roles of the different cortical layers and how do multiple brain areas collaborate to bring about cortical computation?

If you want to understand how a brain area works, you have to compare it to different areas. What we provide is a dataset that, in a very systematic fashion, records the activity of many neurons in all layers in all the areas. Now you can dive into the data and compare all the responses.

Our hope is that this data set will allow us and researchers across the field to design more targeted experiments that dive deeper into these phenomena. For example, if you see a difference between different cell types, then you can try to be more mechanistic and dive deeper using optogenetic experiments and so forth.

What is overfitting and why is it such a problem in neuroscience? How do you hope to alleviate the problem of overfitting?

Because neuroscience experiments have traditionally been very difficult, for a long time people would collect 5 or 10 experiments and try to fit a model. The problem of overfitting would arise, in the sense that they did not have enough data to make sure that their model generalised. And so, new experiments would show their model to be wrong.

Because we can now scale up recordings, people can use more sophisticated machine-learning approaches, divide the data set into sub-parts and make sure that their model generalises well. Providing enough data allows people to use approaches that avoid cherry-picking and creating biases.
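To illustrate the idea of dividing a data set into sub-parts, here is a minimal Python sketch of k-fold cross-validation. It is an editorial illustration, not the Allen Institute's analysis code: the function names (`k_fold_indices`, `fit_line`, `cross_validated_mse`) and the straight-line model are assumptions chosen only to keep the example short.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Shuffle sample indices and split them into k disjoint test folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    splits = []
    for i, test in enumerate(folds):
        # Training set = every fold except the one held out for testing.
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        splits.append((train, test))
    return splits


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def cross_validated_mse(xs, ys, k=5):
    """Average mean squared error on held-out folds (hypothetical helper)."""
    fold_errors = []
    for train, test in k_fold_indices(len(xs), k):
        slope, intercept = fit_line([xs[i] for i in train],
                                    [ys[i] for i in train])
        errors = [(ys[i] - (slope * xs[i] + intercept)) ** 2 for i in test]
        fold_errors.append(sum(errors) / len(errors))
    return sum(fold_errors) / len(fold_errors)
```

The model is always scored on samples it never saw during fitting, so a model that only memorises its training data shows a large held-out error. With only 5 or 10 experiments each fold contains very few samples, which is one reason larger data sets make this kind of check more reliable.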

Will you take steps to manage statistical issues such as multiple query bias?

If you test hypotheses or models, you need to use appropriate statistical tools: divide your data, test on held-out data, do multi-fold validation and so forth to make sure you don’t overfit. In addition, if you test multiple hypotheses simultaneously, you should correct your p-values appropriately.
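The interview does not name a particular correction method. As a hedged illustration, the Python sketch below shows two commonly used options, the Bonferroni correction and the Benjamini-Hochberg procedure; the function names are assumptions made for this example.

```python
def bonferroni(p_values):
    """Multiply each p-value by the number of tests, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg adjusted p-values in the original order."""
    m = len(p_values)
    # Indices of the p-values sorted from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down so adjusted values stay monotonic.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted
```

Bonferroni controls the chance of any false positive and is the more conservative of the two. Benjamini-Hochberg controls the expected fraction of false positives among the reported findings, which is often preferred when many hypotheses are tested at once.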

What do you think the future holds for the Brain Observatory?

In the future we are going to run more targeted experiments based on the data sets we have collected to dive deeper into more precise responses to visual stimuli. An aspect I’m really excited about is a model called OpenScope, where scientists across the field could propose experiments based on their interests or analyses they have done on our data and then we run the experiment on the observatory.

This model would provide an accelerated path to test a hypothesis that people are proposing, because we have such high-throughput capabilities to run experiments now. Really this is a social experiment.

How do you intend to manage the large collaborations and data sets of the Brain Observatory? Do you envision a model where very large groups publish papers like in physics/astronomy?

Yes, that’s how the Allen Institute works. We typically have a very large platform paper where all the authors that contributed are listed.

Do you plan to leverage preprint servers and will the data be open access?

Yes, this is something we are already doing. For example, we posted a preprint recently. The field is now moving very fast in that direction, and we need to encourage people to do so.

Our data has always been open access and is even open access before anything is published!

About Jérôme Lecoq, Ph.D.

Jérôme joined the Allen Institute in 2015 to lead efforts in mapping cortical computation using in vivo two-photon microscopy in behaving animals.

Prior to joining the Allen Institute, Lecoq was a postdoctoral scholar in Mark Schnitzer’s group at Stanford University, where he developed novel imaging methodologies to monitor large neuronal populations in the visual cortex of behaving mice.

Lecoq received a Ph.D. in Neuroscience from the Pierre and Marie Curie University in Paris and an M.S. in Physics from ESPCI ParisTech, a French multidisciplinary engineering school.

For more information on Dr. Jérôme Lecoq’s SWC seminar please visit: SWC Website